Training

Module

Add Azure AI services to your mixed reality project - Training

This course explores the use of Azure speech services by integrating to a hololens2 application. You can also deploy your project to a HoloLens.

Voice input in Unreal lets you interact with a hologram without using hand gestures, and it is supported only on HoloLens 2. Voice input on HoloLens 2 is powered by the same engine that supports speech in all other Universal Windows apps, but Unreal uses a more limited engine to process voice input. This limits voice input features in Unreal to predefined speech mappings, which are covered in the following sections.

If you use the Windows Mixed Reality plugin, voice input doesn't require any special Windows Mixed Reality APIs; it's built on the existing Unreal Engine 4 input mapping API. If you use OpenXR, you should also install the Microsoft OpenXR plugin.

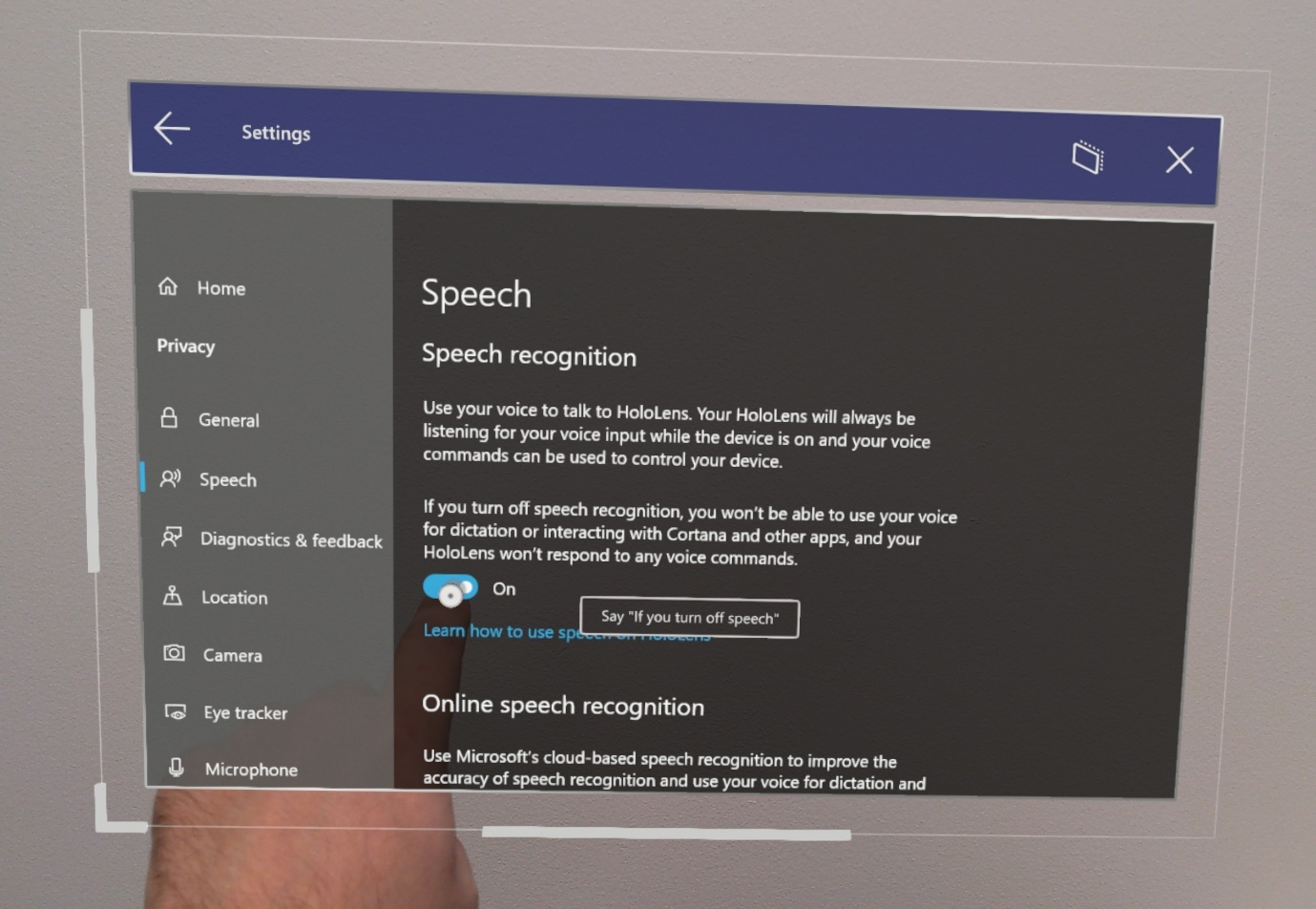

To enable speech recognition on HoloLens:

Note

Speech recognition always functions in the Windows display language configured in the Settings app. It’s recommended that you also enable Online speech recognition for the best service quality.

Connecting speech to actions is an important step when using voice input. Speech mappings monitor the app for keywords that a user might say, then fire a linked action. You can find Speech Mappings by:

To add a new Speech Mapping for a jump command:

Note

Any English word(s) or short sentence(s) can be used as a keyword.

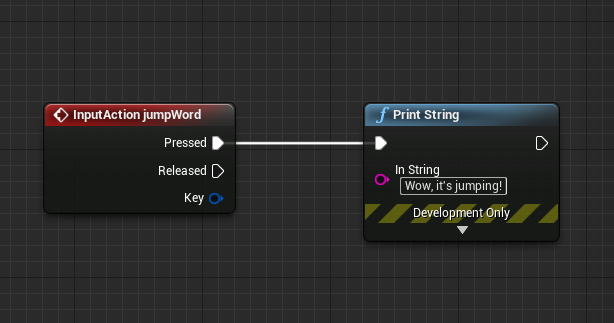
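The mapping itself is configured in the Unreal editor, but the idea behind it can be modeled outside the engine. The following is a minimal standalone C++17 sketch, not Unreal's API: a table that pairs a spoken keyword (a word or short phrase) with an action name, such as the jump command above. `SpeechMappingTable` and `NormalizeKeyword` are hypothetical names, and the case-insensitive, whitespace-trimmed matching is an assumption of this sketch rather than documented Unreal behavior.

```cpp
// Hypothetical model of a speech-mapping table. This is NOT Unreal's API.
#include <cassert>
#include <cctype>
#include <map>
#include <optional>
#include <string>

// Assumption of this sketch: keywords match case-insensitively and ignore
// leading/trailing spaces, so "Jump " and "jump" are the same keyword.
std::string NormalizeKeyword(const std::string& text) {
    std::string out;
    for (unsigned char c : text) {
        out.push_back(static_cast<char>(std::tolower(c)));
    }
    const auto first = out.find_first_not_of(' ');
    if (first == std::string::npos) return "";
    const auto last = out.find_last_not_of(' ');
    return out.substr(first, last - first + 1);
}

class SpeechMappingTable {
public:
    // Links a keyword (one word or a short phrase) to an action name.
    void AddMapping(const std::string& actionName, const std::string& keyword) {
        const std::string key = NormalizeKeyword(keyword);
        assert(!key.empty() && "keyword must not be blank");
        keywordToAction_[key] = actionName;
    }

    // Returns the action linked to a recognized phrase, or nothing if no
    // mapping matches.
    std::optional<std::string> Lookup(const std::string& recognized) const {
        const auto it = keywordToAction_.find(NormalizeKeyword(recognized));
        if (it == keywordToAction_.end()) return std::nullopt;
        return it->second;
    }

private:
    std::map<std::string, std::string> keywordToAction_;
};
```

For example, after `table.AddMapping("Jump", "jump")`, a recognized phrase of "Jump" resolves to the `Jump` action, while an unmapped phrase resolves to nothing and fires no action.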

Speech Mappings can be used as input components, like Action or Axis Mappings, or as Blueprint nodes in the Event Graph. For example, you could link the jump command to print out two different logs depending on when the word is spoken.
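Once a phrase has been resolved to an action name, whatever is bound to that name runs, much as handlers bound to an Action Mapping do. The standalone C++ sketch below models only that binding step; it is not Unreal's `UInputComponent` API, and `ActionDispatcher`, `Bind`, and `Fire` are hypothetical names chosen for illustration. Each bound handler here simply receives the action name, so two handlers on the same action can write two different log lines.

```cpp
// Hypothetical model of binding handlers to an action name.
// This is NOT Unreal's UInputComponent API.
#include <cassert>
#include <cstddef>
#include <functional>
#include <map>
#include <string>
#include <utility>
#include <vector>

class ActionDispatcher {
public:
    using Handler = std::function<void(const std::string& actionName)>;

    // Attaches a handler to an action name; several handlers may share one action.
    void Bind(const std::string& actionName, Handler handler) {
        assert(handler && "handler must be callable");
        handlers_[actionName].push_back(std::move(handler));
    }

    // Invokes every handler bound to actionName, in the order they were bound.
    // Returns how many handlers ran (0 if nothing is bound to that name).
    std::size_t Fire(const std::string& actionName) const {
        const auto it = handlers_.find(actionName);
        if (it == handlers_.end()) return 0;
        for (const Handler& h : it->second) h(actionName);
        return it->second.size();
    }

private:
    std::map<std::string, std::vector<Handler>> handlers_;
};
```

In this model, the speech side only has to produce an action name such as `Jump`; the code that reacts to it never needs to know which keyword was spoken, which is the same separation the input mapping approach provides.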

That's all the setup you'll need to start adding voice input to your HoloLens apps in Unreal. You can find more information on speech and interactivity at the links below, and be sure to think about the experience you're creating for your users.

If you're following the Unreal development journey we've laid out, your next task is exploring the Mixed Reality platform capabilities and APIs:

You can always go back to the Unreal development checkpoints at any time.