This article provides details regarding how data provided by you to the Azure OpenAI service is processed, used, and stored. Azure OpenAI stores and processes data to provide the service and to monitor for uses that violate the applicable product terms. Please also see the Microsoft Products and Services Data Protection Addendum, which governs data processing by the Azure OpenAI Service. Azure OpenAI is an Azure service; learn more about applicable Azure compliance offerings.

Important

Your prompts (inputs) and completions (outputs), your embeddings, and your training data:

- are NOT available to other customers.

- are NOT available to OpenAI.

- are NOT used to improve OpenAI models.

- are NOT used to train, retrain, or improve Azure OpenAI Service foundation models.

- are NOT used to improve any Microsoft or 3rd party products or services without your permission or instruction.

- Your fine-tuned Azure OpenAI models are available exclusively for your use.

The Azure OpenAI Service is operated by Microsoft as an Azure service; Microsoft hosts the OpenAI models in Microsoft's Azure environment and the Service does NOT interact with any services operated by OpenAI (e.g. ChatGPT, or the OpenAI API).

What data does the Azure OpenAI Service process?

Azure OpenAI processes the following types of data:

- Prompts and generated content. Prompts are submitted by the user, and content is generated by the service, via the completions, chat completions, images, and embeddings operations.

- Uploaded data. You can provide your own data for use with certain service features (e.g., fine-tuning, assistants API, batch processing) using the Files API or vector store.

- Data for stateful entities. When you use certain optional features of Azure OpenAI service, such as the Threads feature of the Assistants API and Stored completions, the service will create a data store to persist message history and other content, in accordance with how you configure the feature.

- Augmented data included with or via prompts. When using data associated with stateful entities, the service retrieves relevant data from a configured data store and augments the prompt to produce generations that are grounded with your data. Prompts may also be augmented with data retrieved from a source included in the prompt itself, such as a URL.

- Training & validation data. You can provide your own training data consisting of prompt-completion pairs for the purposes of fine-tuning an OpenAI model.

How does the Azure OpenAI Service process data?

The diagram below illustrates how your data is processed. This diagram covers several types of processing:

- How the Azure OpenAI Service processes your prompts via inferencing to generate content (including when additional data from a designated data source is added to a prompt using Azure OpenAI on your data, Assistants, or batch processing).

- How the Assistants feature stores data in connection with Messages, Threads, and Runs.

- How the Batch feature processes your uploaded data.

- How the Azure OpenAI Service creates a fine-tuned (custom) model with your uploaded data.

- How the Azure OpenAI Service and Microsoft personnel analyze prompts and completions (text and image) for harmful content and for patterns suggesting the use of the service in a manner that violates the Code of Conduct or other applicable product terms.

As depicted in the diagram above, managed customers may apply to modify abuse monitoring.

Generating completions, images or embeddings through inferencing

Models (base or fine-tuned) deployed in your resource process your input prompts and generate responses with text, images, or embeddings. Customer interactions with the model are logically isolated and secured employing technical measures including but not limited to transport encryption of TLS1.2 or higher, compute security perimeter, tokenization of text, and exclusive access to allocated GPU memory. Prompts and completions are evaluated in real time for harmful content types and content generation is filtered based on configured thresholds. Learn more at Azure OpenAI Service content filtering.

Prompts and responses are processed within the customer-specified geography (unless you are using a Global deployment type), but may be processed between regions within the geography for operational purposes (including performance and capacity management). See below for information about location of processing when using a Global deployment type.

The models are stateless: no prompts or generations are stored in the model. Additionally, prompts and generations are not used to train, retrain, or improve the base models.

Understanding location of processing for "Global" and "Data zone" deployment types

In addition to standard deployments, Azure OpenAI Service offers deployment options labelled as 'Global' and 'DataZone.' For any deployment type labeled 'Global,' prompts and responses may be processed in any geography where the relevant Azure OpenAI model is deployed (learn more about region availability of models). For any deployment type labeled as 'DataZone,' prompts and responses may be processed in any geography within the specified data zone, as defined by Microsoft. If you create a DataZone deployment in an Azure OpenAI resource located in the United States, prompts and responses may be processed anywhere within the United States. If you create a DataZone deployment in an Azure OpenAI resource located in a European Union Member Nation, prompts and responses may be processed in that or any other European Union Member Nation. For both Global and DataZone deployment types, any data stored at rest, such as uploaded data, is stored in the customer-designated geography. Only the location of processing is affected when a customer uses a Global deployment type or DataZone deployment type in Azure OpenAI Service; Azure data processing and compliance commitments remain applicable.
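The location rules described above can be summarized as a small lookup. This is only an illustrative sketch: the `DeploymentType` enum and the `processing_scope` function are hypothetical names invented for this example, not part of any Azure SDK, and the returned strings simply paraphrase this article.

```python
from enum import Enum


class DeploymentType(Enum):
    # Hypothetical labels mirroring the deployment types named in this article.
    STANDARD = "Standard"
    DATA_ZONE = "DataZone"
    GLOBAL = "Global"


def processing_scope(deployment_type: DeploymentType, resource_geography: str) -> dict:
    """Summarize where prompts and responses may be processed, and where data rests."""
    if deployment_type is DeploymentType.GLOBAL:
        # Global: any geography where the relevant model is deployed.
        processing = "any geography where the model is deployed"
    elif deployment_type is DeploymentType.DATA_ZONE:
        # DataZone: any geography within the Microsoft-defined data zone.
        processing = f"any geography in the data zone containing {resource_geography}"
    else:
        # Standard: stays within the customer-specified geography.
        processing = f"within {resource_geography}"
    # Data stored at rest stays in the customer-designated geography in all cases.
    return {"processing": processing, "stored_at_rest": resource_geography}


if __name__ == "__main__":
    for kind in DeploymentType:
        print(kind.value, processing_scope(kind, "United States"))
```

In every branch only the processing location changes; the `stored_at_rest` value is always the geography of the resource, which matches the statement that only the location of processing is affected.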

Augmenting prompts to "ground" generated results "on your data"

The Azure OpenAI "on your data" feature lets you connect data sources to ground the generated results with your data. The data remains stored in the data source and location you designate; Azure OpenAI Service does not create a duplicate data store. When a user prompt is received, the service retrieves relevant data from the connected data source and augments the prompt. The model processes this augmented prompt and the generated content is returned as described above. Learn more about how to use the On Your Data feature securely.

Data storage for Azure OpenAI Service features

Some Azure OpenAI Service features store data in the service. This data is either uploaded by the customer, using the Files API or vector store, or is automatically stored in connection with certain stateful entities such as the Threads feature of the Assistants API and Stored completions. Data stored for Azure OpenAI Service features:

- Is stored at rest in the Azure OpenAI resource in the customer's Azure tenant, within the same geography as the Azure OpenAI resource;

- Can be double encrypted at rest: by default with Microsoft's AES-256 encryption, and optionally with a customer-managed key (preview features may not support customer-managed keys);

- Can be deleted by the customer at any time.

Note

Azure OpenAI features in preview might not support all of the above conditions.

Stored data may be used with the following service features/capabilities:

- Creating a customized (fine-tuned) model. Learn more about how fine-tuning works. Fine-tuned models are exclusively available to the customer whose data was used to create the fine-tuned model, are encrypted at rest (when not deployed for inferencing), and can be deleted by the customer at any time. Training data uploaded for fine-tuning is not used to train, retrain, or improve any Microsoft or 3rd party base models.

- Batch processing. Learn more about how batch processing works. Batch processing is a Global deployment type; data stored at rest remains in the designated Azure geography until processing capacity becomes available; processing may occur in any geography where the relevant Azure OpenAI model is deployed (learn more about region availability of models).

- Assistants API (preview). Learn more about how the Assistants API works. Some features of Assistants, such as Threads, store message history and other content.

- Stored completions (preview). Stored completions stores input-output pairs from the customer’s deployed Azure OpenAI models such as GPT-4o through the chat completions API and displays the pairs in the Azure AI Foundry portal. This allows customers to build datasets with their production data, which can then be used for evaluating or fine-tuning models (as permitted in applicable Product Terms).

Preventing abuse and harmful content generation

To reduce the risk of harmful use of the Azure OpenAI Service, the Azure OpenAI Service includes both content filtering and abuse monitoring features. To learn more about content filtering, see Azure OpenAI Service content filtering. To learn more about abuse monitoring, see abuse monitoring.

Content filtering occurs synchronously as the service processes prompts to generate content as described above and here. No prompts or generated content are stored in the content classifier models, and prompts and outputs are not used to train, retrain, or improve the classifier models without your consent.

Safety evaluations of fine-tuned models evaluate a fine-tuned model for potentially harmful responses using Azure’s risk and safety metrics. Only the resulting assessment (deployable or not deployable) is logged by the service.

The Azure OpenAI abuse monitoring system is designed to detect and mitigate instances of recurring content and/or behaviors that suggest use of the service in a manner that may violate the code of conduct or other applicable product terms. As described here, the system employs algorithms and heuristics to detect indicators of potential abuse. When these indicators are detected, a sample of the customer’s prompts and completions may be selected for review. Review is conducted by an LLM by default, with additional reviews by human reviewers as necessary. Detailed information about AI and human review is available at Azure OpenAI Service abuse monitoring.

For AI review, the customer’s prompts and completions are not stored by the system or used to train the LLM or other systems. For human review, the data store where prompts and completions are stored is logically separated by customer resource (each request includes the resource ID of the customer’s Azure OpenAI resource). A separate data store is located in each geography in which the Azure OpenAI Service is available, and a customer’s prompts and generated content are stored in the Azure geography where the customer’s Azure OpenAI Service resource is deployed, within the Azure OpenAI Service boundary. Human reviewers assessing potential abuse can access prompts and completions data only when that data has already been flagged by the abuse monitoring system. The human reviewers are authorized Microsoft employees who access the data via pointwise queries using request IDs, Secure Access Workstations (SAWs), and Just-In-Time (JIT) request approval granted by team managers. For Azure OpenAI Service deployed in the European Economic Area, the authorized Microsoft employees are located in the European Economic Area.

If the customer has been approved for modified abuse monitoring (learn more at Azure OpenAI Service abuse monitoring), Microsoft does not store the prompts and completions associated with the approved Azure subscription(s), and the human review process described above is not possible and is not performed. However, AI review may still be conducted, leveraging LLMs that review prompts and completions at the time provided or generated, as applicable.

Note

Azure Preview features, including Azure OpenAI models in preview, may employ different privacy practices, including with respect to abuse monitoring. Previews may be subject to supplemental terms at: Supplemental Terms of use for Microsoft Azure Previews.

How can a customer verify if data storage for abuse monitoring is off?

There are two ways for customers, once approved to turn off abuse monitoring, to verify that data storage for abuse monitoring has been turned off in their approved Azure subscription:

- Using the Azure portal, or

- Using the Azure CLI (or any management API).

Note

The value of "false" for the "ContentLogging" attribute appears only if data storage for abuse monitoring is turned off. Otherwise, this property will not appear in either Azure portal or Azure CLI's output.

Prerequisites

- Sign in to Azure.

- Select the Azure Subscription which hosts the Azure OpenAI Service resource.

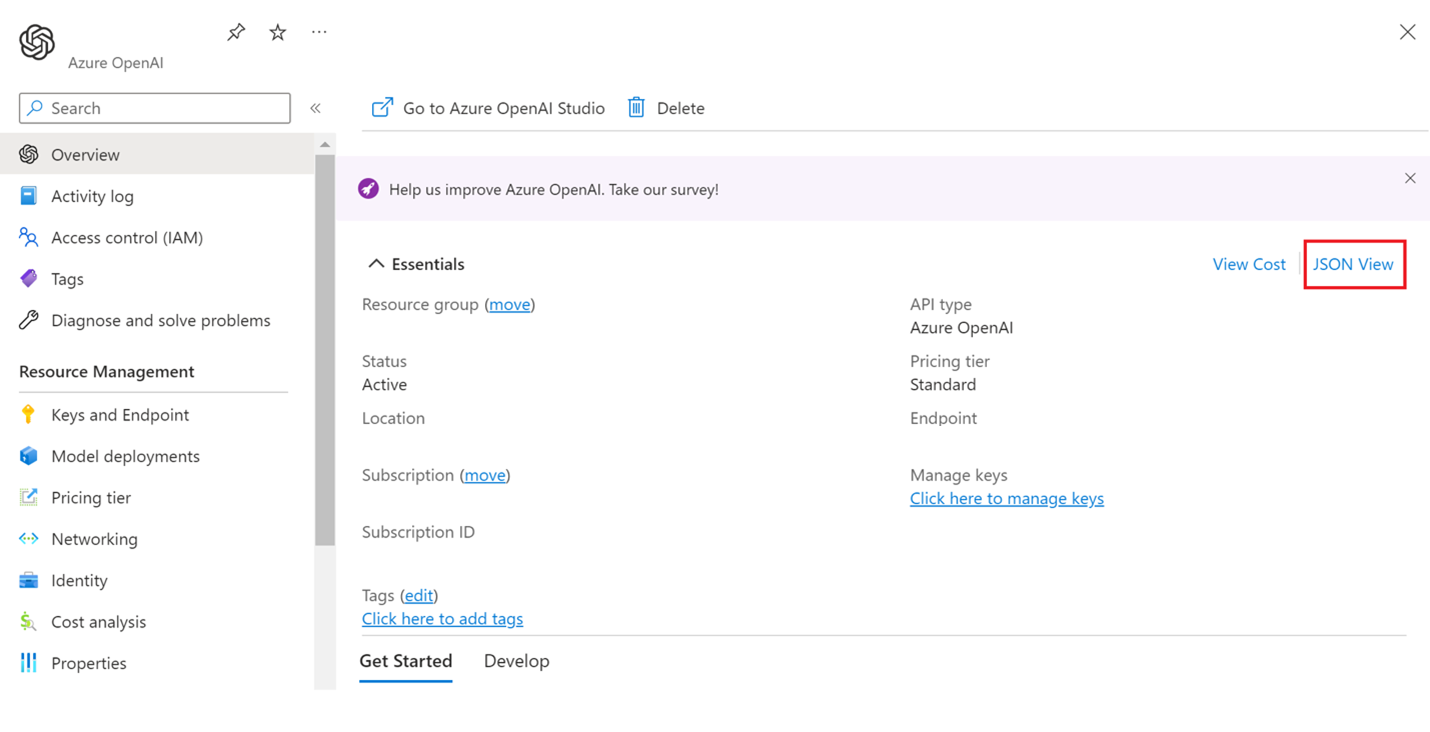

- Navigate to the Overview page of the Azure OpenAI Service resource.

Go to the resource Overview page

Select the JSON view link in the top-right corner of the Overview page.

In the Capabilities list, an entry named "ContentLogging" appears with its value set to "false" when data storage for abuse monitoring is off.

{
  "name": "ContentLogging",
  "value": "false"
}
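The same check can be scripted against the resource JSON, for example the JSON view content or the output of a management call such as `az cognitiveservices account show`. The sketch below is a minimal illustration under stated assumptions: the function name `abuse_monitoring_storage_off` is hypothetical, and the capabilities list is assumed to sit either at the top level or under a `properties` object as a list of `name`/`value` pairs like the entry shown above.

```python
import json


def abuse_monitoring_storage_off(resource_json: str) -> bool:
    """Return True only if a ContentLogging capability is present and set to "false"."""
    resource = json.loads(resource_json)
    # Assumption: capabilities may be nested under "properties" or appear at the top level.
    capabilities = (
        resource.get("properties", {}).get("capabilities")
        or resource.get("capabilities")
        or []
    )
    for capability in capabilities:
        if capability.get("name") == "ContentLogging":
            return str(capability.get("value", "")).lower() == "false"
    # The attribute appears only when storage is turned off, so its absence means "not off".
    return False


if __name__ == "__main__":
    sample = json.dumps(
        {"properties": {"capabilities": [{"name": "ContentLogging", "value": "false"}]}}
    )
    print(abuse_monitoring_storage_off(sample))  # True
    print(abuse_monitoring_storage_off("{}"))    # False
```

Treating a missing entry as "not off" follows the note above: the "ContentLogging" attribute is shown only when data storage for abuse monitoring has been turned off.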

To learn more about Microsoft's privacy and security commitments see the Microsoft Trust Center.

Change log

| Date | Changes |
|---|---|
| 17 December 2024 | Added information about data processing and storage in connection with new Stored completions feature; added language clarifying that Azure OpenAI features in preview may not support all data storage conditions; removed "preview" designation for Batch processing |
| 18 November 2024 | Added information about location of data processing for new ‘Data zone’ deployment types; added information about new AI review of prompts and completions as part of preventing abuse and generation of harmful content |
| 4 September 2024 | Added information (and revised existing text accordingly) about data processing for new features including Assistants API (preview), Batch (preview), and Global Deployments; revised language related to location of data processing, in accordance with Azure data residency principles; added information about data processing for safety evaluations of fine-tuned models; clarified commitments related to use of prompts and completions; minor revisions to improve clarity |
| 23 June 2023 | Added information about data processing for new Azure on your data feature; removed information about abuse monitoring which is now available at Azure OpenAI Service abuse monitoring. Added summary note. Updated and streamlined content and updated diagrams for additional clarity. Added change log. |

See also

- Code of conduct for Azure OpenAI Service integrations

- Overview of Responsible AI practices for Azure OpenAI models

- Transparency note and use cases for Azure OpenAI Service

- Data Residency in Azure

- Compare Azure OpenAI in Azure Government

- Limited access to Azure OpenAI Service

- Report abuse of Azure OpenAI Service through the Report Abuse Portal

- Report problematic content to [email protected]