Microsoft is committed to empowering customers to confidently adopt and build Artificial Intelligence (AI) solutions by using its cloud services. As AI continues to evolve in a rapidly developing and largely unregulated landscape, Microsoft takes a thoughtful and proactive approach to ensure its AI technologies are safe, secure, and trustworthy. Microsoft's approach to AI centers on comprehensive offerings, strong governance frameworks, and well-defined Responsible AI (RAI) principles, all supported by a shared responsibility model. Through this foundation, Microsoft enables customers to design and deploy AI systems that align with established security, privacy, and ethical standards, fostering innovation while maintaining trust.

Which product offerings from Microsoft utilize AI?

Microsoft has a variety of AI service offerings that you can broadly categorize into two groups: ready-to-use generative AI systems such as Copilot, and AI development platforms where you can build your own generative AI systems.

Azure AI serves as the common development platform, providing a foundation for responsible innovation with enterprise-grade privacy, security, and compliance.

For more information on Microsoft’s AI offerings, see the following product documentation:

How does the shared responsibility model apply to AI?

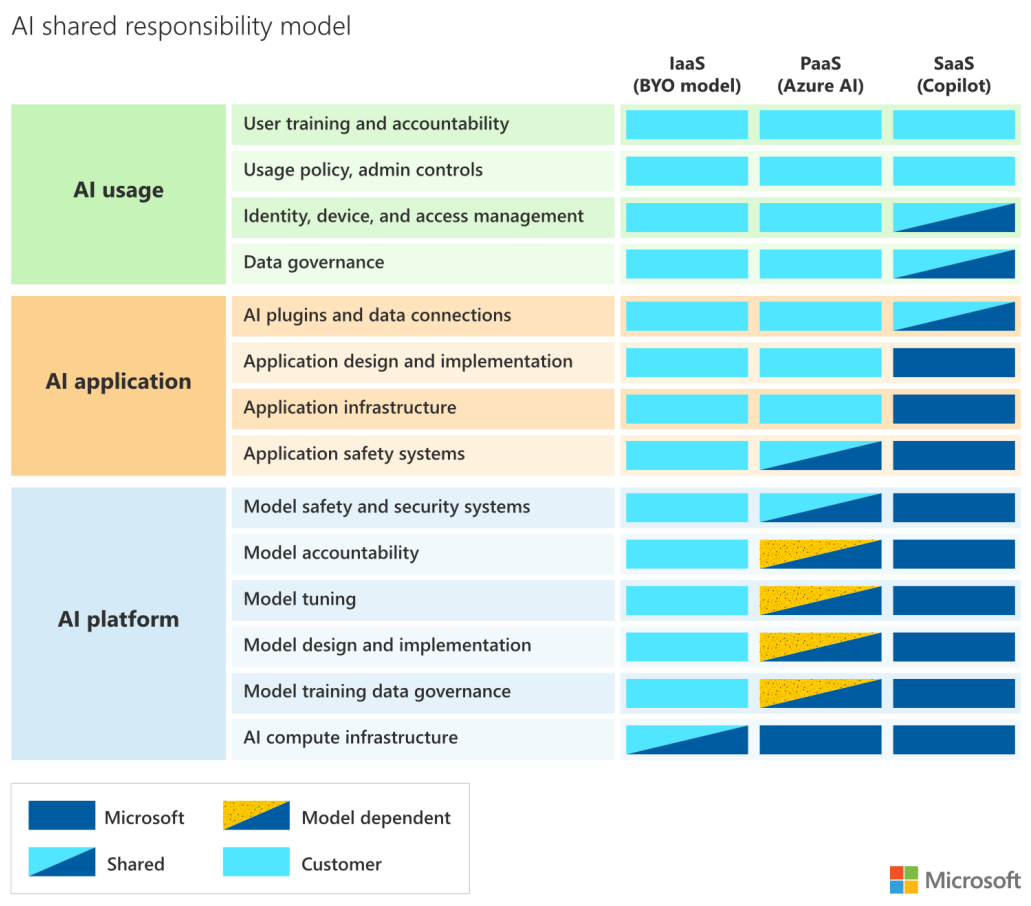

As with other cloud offerings, mitigation of AI risks is a shared responsibility between Microsoft and customers. Depending on the AI capabilities you implement within your organization, responsibilities shift between Microsoft and you. This model varies depending on the type of AI deployment—whether it's a fully managed SaaS solution like Microsoft 365 Copilot or a custom model deployment using Azure OpenAI.

The following diagram illustrates the areas of responsibility between Microsoft and customers according to the type of deployment.

For more information, see Artificial Intelligence (AI) shared responsibility model.

Microsoft responsibilities

Microsoft is responsible for delivering secure, compliant, and trustworthy AI infrastructure and services across its cloud platforms, including IaaS, PaaS, and SaaS models. Microsoft embeds Responsible AI principles into its development lifecycle by following the Responsible AI Standard. This standard ensures fairness, reliability and safety, privacy and security, inclusiveness, transparency, and accountability by design.

In PaaS and SaaS scenarios, Microsoft manages the AI compute infrastructure, model training environments, and application hosting platforms. Microsoft applies consistent security practices under the Security Development Lifecycle (SDL). These practices include threat modeling, secure code analysis, and penetration testing. Microsoft also implements model safety systems that use techniques like retrieval-augmented generation (RAG), metaprompt engineering, and abuse detection to reduce hallucinations and improve factual grounding.

Privacy and data protection are foundational. Microsoft doesn't use customer data to train foundational models without explicit consent. Microsoft governs all data interactions by its Data Protection Addendum and Product Terms. Additionally, Microsoft ensures transparency and explainability by providing comprehensive documentation, responsible use disclaimers, and technical resources to help customers understand the behavior and limitations of AI services.

For SaaS offerings, such as Microsoft Copilot, Microsoft assumes operational responsibility for the full application stack. This responsibility includes model lifecycle management, application infrastructure, plugin governance, and safety systems. For PaaS models like Azure AI, Microsoft shares responsibility with the customer for model design, tuning, and integration, and continues to operate and secure the underlying platform services. For more information on IaaS, PaaS, and SaaS, see Use platform as a service (PaaS) options and SaaS and Multitenant Solution Architecture.

Customer responsibilities

Customers are responsible for managing how AI is used within their organization. This responsibility includes defining policies, configuring environments, and enabling controls to ensure AI systems align with business goals and regulatory requirements.

In all deployment models, customers must establish AI governance and oversight mechanisms, such as usage policies, review processes, and responsible AI training. Customers are also responsible for identity, device, and access management. They ensure that appropriate role-based access controls and authentication methods are in place. They must implement data governance frameworks to classify, protect, and manage the lifecycle of their data assets.

For IaaS and PaaS scenarios, customers own the design and implementation of AI models and applications. This ownership includes model selection, prompt engineering, fine-tuning, and integration with business systems. Customers are also accountable for AI plugin configurations, application safety systems, and infrastructure management if applicable.

Even when using SaaS-based AI solutions, customers remain responsible for user training and education, configuring usage policies, and ensuring outputs are reviewed and used appropriately. Customers must map AI usage to applicable compliance requirements, such as GDPR, HIPAA, or the EU AI Act. They must establish internal policies that reflect their organizational values and risk tolerance.

Customers must take proactive steps to mitigate misuse, validate AI-generated outputs, and foster a culture of responsible AI adoption across teams.

For information on how Microsoft supports customers in carrying out their portion of the shared responsibility model, see How we support our customers in building AI responsibly (pg. 38).

How does Microsoft govern the development and management of AI systems?

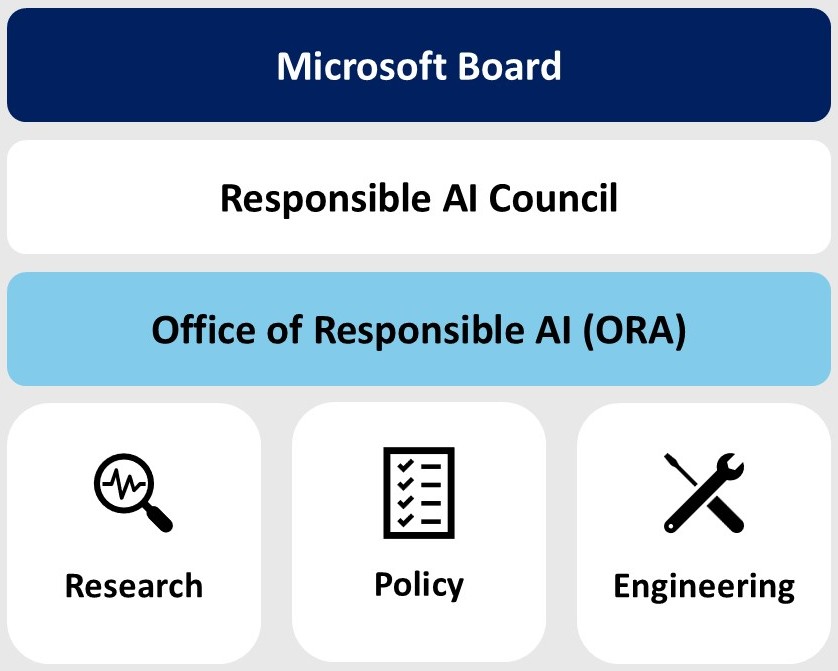

No one team or organization is solely responsible for driving the adoption of responsible AI practices. We combine a federated, bottom-up approach with strong top-down support and oversight by company leadership to fuel our policies, governance, and processes. Specialists in research, policy, and engineering combine their expertise and collaborate on cutting-edge practices to ensure we meet our own commitments while simultaneously supporting our customers and partners.

Responsible AI Standard

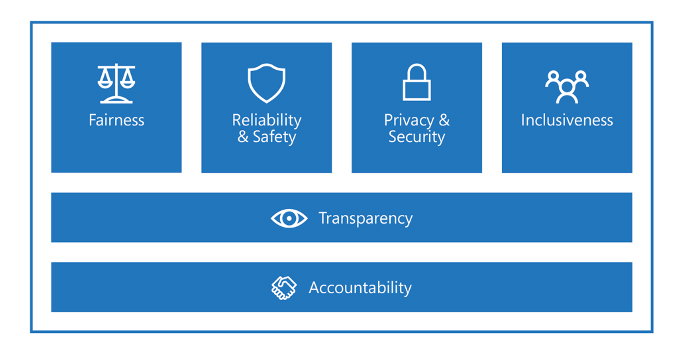

- Responsible AI Policy: Microsoft's Responsible AI policy is grounded in six core principles: fairness, reliability and safety, privacy and security, inclusiveness, transparency, and accountability. These principles guide the design, development, and deployment of AI systems to ensure they're ethical, trustworthy, and human-centered.

- Responsible AI Standard: Putting responsible AI into practice begins with Microsoft's Responsible AI Standard. The Standard details how to integrate responsible AI into engineering teams, the AI development lifecycle, and tooling. The goal is to mitigate risks, uphold human rights, and ensure AI technologies benefit society broadly. For more details on Responsible AI at Microsoft, see here.

Roles and responsibilities

- Microsoft Board: Our management of responsible AI starts with CEO Satya Nadella and cascades across the senior leadership team and all of Microsoft. The Environmental, Social, and Public Policy Committee of the Board of Directors provides oversight and guidance on responsible AI policies and programs.

- Responsible AI Council: Co-led by Vice Chair and President Brad Smith and Chief Technology Officer Kevin Scott, the Responsible AI Council provides a forum for business leaders and representatives from research, policy, and engineering to regularly grapple with the biggest challenges surrounding AI and to drive progress in our responsible AI policies and processes.

- Office of Responsible AI (ORA): ORA is tasked with putting responsible AI principles into practice, which it accomplishes through five key functions. ORA sets company-wide internal policies, defines governance structures, provides resources to adopt AI practices, reviews sensitive use cases, and helps shape public policy around AI.

- Research: Researchers in the AI Ethics and Effects in Engineering and Research (Aether) Committee, Microsoft Research, and engineering teams keep the responsible AI program on the leading edge of issues through thought leadership.

- Policy: ORA collaborates with stakeholders and policy teams across the company to develop policies and practices to uphold our AI principles when building AI applications.

- Engineering: Engineering teams create AI platforms, applications, and tools. They provide feedback to ensure policies and practices are technically feasible, innovate novel practices and new technologies, and scale responsible AI practices throughout the company.

What principles serve as the foundation for Microsoft's RAI program?

Putting responsible AI into practice begins with our Responsible AI Standard. The Standard details how to integrate responsible AI into engineering teams, the AI development lifecycle, and tooling. The RAI Standard covers six domains and establishes 14 goals that are intended to reduce AI risks and their associated harms. Each goal in the RAI Standard is composed of requirements – concrete steps for teams to build AI systems in accordance with the domains.

- Accountability: We're accountable for how our technology operates and affects the world.

- Transparency: We're open about how and why we build AI systems, what their limitations are, and how the system makes decisions.

- Fairness: We make sure AI systems treat everyone fairly and avoid affecting similarly situated groups of people in different ways.

- Reliability and Safety: We make sure AI systems operate as they were originally designed, respond safely to unanticipated conditions, and resist harmful manipulation.

- Privacy and Security: We ensure data and models are protected and individuals' fundamental right to privacy is preserved.

- Inclusiveness: We intentionally make sure we include the full spectrum of communities.

For more information on the Responsible AI principles, see Responsible AI.

How does Microsoft implement the policies defined by ORA?

The Responsible AI Standard (RAI Standard) reflects Microsoft's long-term effort to establish product development and risk management criteria for AI systems. It puts the RAI principles into action by defining goals, requirements, and practices for all AI systems developed by Microsoft.

- Goals: Define what it means to uphold our AI principles

- Requirements: Define how we must uphold our AI principles

- Practices: The aids that help satisfy the requirements

The main driving force in the RAI Standard is the Impact Assessment, where the developing team documents outcomes for each goal requirement. For the Privacy & Security and Inclusiveness goals, the RAI Standard requires teams to comply with the privacy, security, and accessibility programs that already exist at Microsoft and to follow any AI-specific guidance those programs provide.

You can find the full RAI Standard in the Microsoft Responsible AI Standard general requirements documentation.

What is covered under the Product Terms?

The Microsoft Product Terms define the rights, responsibilities, and conditions under which customers can use Microsoft Online Services, including AI-enabled features such as Microsoft 365 Copilot. These terms form a foundational part of Microsoft’s compliance and contractual commitments. They apply to all Core Online Services, as outlined in the Microsoft licensing documentation.

Specifically, the Product Terms cover the following areas:

- Customer Data Usage: Microsoft acts as a data processor and handles Customer Data strictly in accordance with the terms set forth in the Product Terms and the Microsoft Products and Services Data Protection Addendum (DPA). Microsoft processes Customer Data only to provide the services and never uses it to train foundation models without explicit permission.

- Acceptable Use Requirements: Customers must comply with Microsoft's Acceptable Use Policy, which the Product Terms incorporate. This policy includes restrictions on misuse of AI features. For example, customers can't intentionally circumvent safety filters or attempt to manipulate metaprompts in Microsoft 365 Copilot.

- Shared Responsibility Model: The Product Terms clarify the division of operational and compliance responsibilities between Microsoft and the customer. For Software as a Service (SaaS) solutions like Copilot, Microsoft manages the infrastructure, model operations, and embedded safety systems. Customers are responsible for appropriate use, access controls, and user education.

- Service Coverage: The Product Terms list all covered services—referred to as Core Online Services—and define how each service aligns with Microsoft’s privacy, security, and compliance commitments.

- Transparency and Trust: The Product Terms work in conjunction with resources like the Microsoft Trust Center, Service Trust Portal, and Compliance Manager to provide visibility into how Microsoft designs, secures, and operates its services, including those enhanced by AI.

Microsoft makes these terms publicly available and updates them regularly to reflect evolving technology, regulatory requirements, and internal policies, including the Responsible AI Standard (RAI Standard). Customers should review the Product Terms periodically to stay informed about service use rights and obligations.

What does the lifecycle of a Microsoft AI system look like?

Microsoft engineering teams implement a range of technical safeguards to ensure AI systems are secure, reliable, and aligned with our Responsible AI standards. This approach includes proactively identifying and mitigating risks related to fairness, safety, reliability, privacy, inclusiveness, and transparency. The AI development cycle follows an iterative, risk-focused framework designed to ensure that generative AI applications are built and deployed responsibly. This lifecycle is built around four core functions that align with the NIST AI Risk Management Framework. Together, these phases guide teams through responsible innovation and risk mitigation. For more information about the development cycle and Responsible AI, see Responsible AI.

Govern: The govern phase lays the foundation for responsible AI by establishing the roles, responsibilities, and policies that guide the development and deployment of AI systems. At Microsoft, this phase begins with the Responsible AI Standard, which ensures that AI development aligns with the company’s core principles, including fairness, accountability, and transparency. Governance includes developing and updating policies informed by evolving regulations and stakeholder input, conducting pre-deployment reviews, and requiring transparency materials to help users understand AI capabilities and limitations. Cross-functional collaboration is also emphasized, ensuring that legal, engineering, and policy experts work together to oversee AI projects effectively.

Map: Mapping refers to identifying and prioritizing the risks associated with AI systems. Microsoft starts this phase with a Responsible AI Impact Assessment to evaluate the potential for harm and determine appropriate mitigations. Privacy and security are reviewed using tools like threat modeling, while AI red teaming is employed to simulate adversarial or misuse scenarios that might expose vulnerabilities. This stage helps Microsoft understand how risks might manifest in practice and ensures systems are assessed holistically, from the underlying models to the full application environment.

Measure: Once risks are identified, the measure phase focuses on systematically evaluating them using defined metrics. This evaluation includes assessing the likelihood of harmful content being generated, the effectiveness of safety mitigations, and the performance of AI outputs in areas such as groundedness (factual alignment with source data), relevance, and content safety. Microsoft uses tools integrated into Azure AI Studio, such as safety evaluations and adversarial test datasets, to monitor outputs. These evaluations help teams make informed decisions and refine AI behavior to ensure outputs meet responsible use standards.

Manage: The manage phase involves implementing mitigations and continuously monitoring AI systems throughout their lifecycle. Risk management occurs at both the platform and application levels. For instance, model-level adjustments might include fine-tuning or deploying content filters, while application-level strategies focus on grounding outputs, designing intuitive user interfaces, and including in-product disclosures to promote transparency. Microsoft also uses staged rollouts and ongoing monitoring systems to detect performance issues, collect feedback, and respond to incidents. Tools like Prompt Shield are deployed to defend against jailbreak attempts, and Content Credentials help label and track AI-generated content to ensure provenance and trustworthiness.

Pre-deployment reviews and sensitive use counseling

Microsoft introduced an internal workflow tool that centralizes responsible AI requirements and simplifies documentation for pre-deployment reviews. For high-impact or sensitive use cases, such as those involving biometric data or critical infrastructure, Microsoft provides hands-on counseling to ensure heightened scrutiny and ethical alignment.

Continuous learning and adaptation

The review process isn't static. Microsoft continuously evolves its practices based on:

- Advances in AI capabilities

- Feedback from customers, regulators, and civil society

- Real-world deployment experiences

This iterative approach ensures that Microsoft’s AI systems remain trustworthy, even as the technology and regulatory landscape evolves.

For more information, see the 2025 Responsible AI Transparency Report.

What is Copilot and how does it work?

Microsoft Copilot is a family of AI-powered assistants integrated across Microsoft 365, Dynamics 365, GitHub, and other platforms. These Copilots enhance productivity, automate tasks, and support decision-making—all while operating within Microsoft’s enterprise-grade security, compliance, and privacy boundaries.

Copilot products

Microsoft offers several types of Copilot, each tailored to specific environments:

- Microsoft 365 Copilot: Embedded in apps like Word, Excel, Outlook, and Teams to assist with content generation, summarization, and task automation.

- Copilot for Dynamics 365: Supports business processes like finance, sales, and customer service by surfacing insights and automating workflows.

- GitHub Copilot: Assists developers by suggesting code and documentation within IDEs.

- Security Copilot: Helps security analysts investigate threats and respond to incidents using natural language queries.

- Copilot Studio: Enables organizations to build custom copilots using low-code tools and integrate them with Microsoft Graph and Azure OpenAI.

Copilot process

Copilot operates through a multistep process:

- Input: The user enters a prompt (for example, “Summarize this email thread”).

- Grounding: The system retrieves relevant enterprise data by using Microsoft Graph, SharePoint, or other connectors.

- Processing: The system sends the prompt and grounded data to an LLM (such as GPT-4) hosted in Azure OpenAI or another Microsoft-controlled environment.

- Response: The model generates a response within the application context.

- Post-processing: Microsoft applies safety filters, content moderation, and formatting before displaying the output to the user.

This architecture ensures that Copilot responses are contextually relevant, secure, and aligned with enterprise data governance policies.
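The order of the stages above can be sketched in a few lines of Python. This is a minimal illustration, not Microsoft's implementation: `Document`, `ground`, `call_model`, `post_process`, `run_copilot`, and `BLOCKED_TERMS` are hypothetical names, the keyword match stands in for retrieval through Microsoft Graph or other connectors, the model call is a placeholder that only echoes source titles, and permission checks are omitted for brevity.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    """Hypothetical stand-in for a piece of enterprise content."""
    title: str
    text: str


# Hypothetical block list used by the toy safety filter below.
BLOCKED_TERMS = {"password"}


def ground(prompt: str, corpus: list[Document]) -> list[Document]:
    """Grounding: keep documents that share at least one word with the prompt."""
    prompt_words = set(prompt.lower().split())
    return [d for d in corpus if prompt_words & set(d.text.lower().split())]


def call_model(prompt: str, context: list[Document]) -> str:
    """Processing: placeholder for the LLM call; it only lists the sources used."""
    titles = ", ".join(d.title for d in context) or "no sources"
    return f"Summary based on: {titles}"


def post_process(response: str) -> str:
    """Post-processing: toy safety filter plus whitespace cleanup."""
    if any(term in response.lower() for term in BLOCKED_TERMS):
        return "[response withheld by safety filter]"
    return response.strip()


def run_copilot(prompt: str, corpus: list[Document]) -> str:
    """Run the stages in order: input, grounding, processing, post-processing."""
    context = ground(prompt, corpus)
    raw_response = call_model(prompt, context)
    return post_process(raw_response)


if __name__ == "__main__":
    corpus = [
        Document("Q3 plan", "budget email thread about Q3"),
        Document("Lunch", "menu for friday"),
    ]
    print(run_copilot("Summarize this email thread", corpus))
```

Keeping each stage as a separate function makes it clear that the safety filter runs on the model output before anything is shown to the user.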

What data does Copilot access?

Copilot only accesses data that you're already authorized to view. This data includes:

- Emails, documents, and chats in Microsoft 365

- CRM records in Dynamics 365

- Code repositories in GitHub

- Security logs in Microsoft Sentinel (for Security Copilot)

Microsoft Entra ID governs access, and all data access is scoped to your permissions. Copilot doesn't override existing access controls or expose unauthorized content. For more information, see AI security for Microsoft 365 Copilot.
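The idea that data access is scoped to the signed-in user's permissions can be illustrated with a small filter. This is a sketch under stated assumptions, not the Microsoft Entra ID or Microsoft Graph API: `Resource`, `readers`, and `visible_resources` are hypothetical names, and permissions are modeled as a plain set of user and group identifiers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resource:
    """Hypothetical item (email, document, record) with its allowed readers."""
    name: str
    readers: frozenset  # user or group identifiers that may read the item


def visible_resources(user_id: str, group_ids: set[str],
                      resources: list[Resource]) -> list[Resource]:
    """Return only the resources the user can already read, directly or via a group."""
    principals = {user_id} | set(group_ids)
    return [r for r in resources if principals & r.readers]


if __name__ == "__main__":
    resources = [
        Resource("team-notes.docx", frozenset({"grp-sales"})),
        Resource("payroll.xlsx", frozenset({"grp-hr"})),
        Resource("my-draft.docx", frozenset({"alice"})),
    ]
    for item in visible_resources("alice", {"grp-sales"}, resources):
        print(item.name)
```

In this sketch the filter runs before any content reaches the assistant, so an item the user can't read is never a candidate for grounding.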

Microsoft 365 Copilot doesn't use customer data to train foundation models, as stated in our commitments in the Microsoft Product Terms. Microsoft 365 Copilot uses OpenAI-provided foundation models, which don't learn dynamically based on usage. During development of these foundation models, OpenAI maintains high standards for data anonymization prior to training models. OpenAI continues to improve these systems iteratively and maintain quality control through human review of training data to remove false negatives.

For more information on controls Microsoft 365 implements to meet our responsibilities, obligations, and commitments for Microsoft 365 Copilot, see the Service Trust Portal.

Copilot data storage

Copilot interactions are stored within the user’s Exchange Online mailbox. This data includes:

- Prompt and response pairs

- Metadata for auditing and compliance

- Logs for Copilot Safety Data (CSD) analysis

Microsoft Purview policies manage retention:

- Default retention: Messages are retained for a configurable period (for example, 30, 90, or 365 days).

- Deletion: After the retention period, data moves to the SubstrateHolds folder and then is permanently deleted unless subject to a legal hold or other retention policy.

- eDiscovery: You can search all stored data by using Microsoft Purview eDiscovery tools.
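The retention behavior described above can be sketched as a small decision function. This is an illustration only, not the Microsoft Purview API: `RetentionState`, `retention_state`, and the parameter names are hypothetical, and the sketch collapses the move to the SubstrateHolds folder and the later permanent deletion into a single "eligible for deletion" state.

```python
from datetime import datetime, timedelta, timezone
from enum import Enum


class RetentionState(Enum):
    RETAINED = "retained"
    ELIGIBLE_FOR_DELETION = "eligible for deletion"
    PRESERVED_BY_HOLD = "preserved by hold"


def retention_state(created: datetime, now: datetime, retention_days: int,
                    on_hold: bool = False) -> RetentionState:
    """Classify a stored interaction against a configurable retention period."""
    if now - created <= timedelta(days=retention_days):
        return RetentionState.RETAINED
    # Past the retention period: a legal hold or another retention policy
    # keeps the item from being permanently deleted.
    if on_hold:
        return RetentionState.PRESERVED_BY_HOLD
    return RetentionState.ELIGIBLE_FOR_DELETION


if __name__ == "__main__":
    created = datetime(2025, 1, 1, tzinfo=timezone.utc)
    now = datetime(2025, 3, 1, tzinfo=timezone.utc)
    print(retention_state(created, now, retention_days=30).value)
```

Passing `now` explicitly, rather than reading the clock inside the function, keeps the check deterministic and easy to test.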