Azure DevOps Services | Azure DevOps Server 2022 - Azure DevOps Server 2019

Azure Pipelines can automatically build and validate every pull request and commit to your Azure Repos Git repository.

Choose a repository to build

You create a new pipeline by first selecting a repository and then a YAML file in that repository. The repository that contains the YAML file is called the self repository. By default, this is the repository that your pipeline builds.

You can later configure your pipeline to check out a different repository or multiple repositories. To learn how to do this, see multi-repo checkout.

Azure Pipelines must be granted access to your repositories to trigger their builds and fetch their code during builds. Normally, a pipeline has access to repositories in the same project. To access repositories in a different project, you need to update the permissions granted to job access tokens.

CI triggers

Continuous integration (CI) triggers cause a pipeline to run whenever you push an update to the specified branches or you push specified tags.

YAML pipelines are configured by default with a CI trigger on all branches, unless the Disable implied YAML CI trigger setting, introduced in Azure DevOps sprint 227, is enabled. The Disable implied YAML CI trigger setting can be configured at the organization level or at the project level. When the Disable implied YAML CI trigger setting is enabled, CI triggers for YAML pipelines are not enabled if the YAML pipeline doesn't have a trigger section. By default, Disable implied YAML CI trigger is not enabled.

Branches

You can control which branches get CI triggers with a simple syntax:

trigger:

- main

- releases/*

You can specify the full name of the branch (for example, main) or a wildcard (for example, releases/*).

See Wildcards for information on the wildcard syntax.

Note

You cannot use variables in triggers, as variables are evaluated at runtime (after the trigger has fired).

Note

If you use templates to author YAML files, then you can only specify triggers in the main YAML file for the pipeline. You cannot specify triggers in the template files.

For more complex triggers that use exclude or batch, you must use the full syntax as shown in the following example.

# specific branch build
trigger:
  branches:
    include:
    - main
    - releases/*
    exclude:
    - releases/old*

In the above example, the pipeline will be triggered if a change is pushed to main or to any releases branch. However, it won't be triggered if a change is made to a releases branch that starts with old.

If you specify an exclude clause without an include clause, then it is equivalent to specifying * in the include clause.
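As a minimal sketch of that equivalence, the trigger below lists only an exclude clause. The branch pattern is reused from the earlier example, and the commented-out form shows what the trigger is treated as.

```yaml
# Only an exclude clause is given...
trigger:
  branches:
    exclude:
    - releases/old*

# ...which is treated as if you had written:
# trigger:
#   branches:
#     include:
#     - '*'
#     exclude:
#     - releases/old*
```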

In addition to specifying branch names in the branches lists, you can also configure triggers based on tags by using the following format:

trigger:
  branches:
    include:
    - refs/tags/{tagname}
    exclude:
    - refs/tags/{othertagname}

If you didn't specify any triggers, and the Disable implied YAML CI trigger setting is not enabled, the default is as if you wrote:

trigger:
  branches:
    include:
    - '*'  # must quote since "*" is a YAML reserved character; we want a string

Important

When you specify a trigger, it replaces the default implicit trigger, and only pushes to branches that are explicitly configured to be included will trigger a pipeline. Includes are processed first, and then excludes are removed from that list.

Batching CI runs

If you have many team members pushing changes frequently, you may want to reduce the number of runs you start.

If you set batch to true, when a pipeline is running, the system waits until the run is completed, then starts another run with all changes that have not yet been built.

# specific branch build with batching
trigger:
  batch: true
  branches:
    include:
    - main

Note

batch is not supported in repository resource triggers.

To clarify this example, suppose that a push A to main causes the above pipeline to run. While that pipeline is running, additional pushes B and C are made to the repository. These pushes do not start new independent runs immediately. Instead, after the first run completes, all pushes made up to that point are batched together and a new run is started.

Note

If the pipeline has multiple jobs and stages, then the first run should still reach a terminal state by completing or skipping all its jobs and stages before the second run can start. For this reason, you must exercise caution when using this feature in a pipeline with multiple stages or approvals. If you wish to batch your builds in such cases, it is recommended that you split your CI/CD process into two pipelines - one for build (with batching) and one for deployments.
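One possible way to make that split is sketched below, assuming the deployment pipeline is linked to the build pipeline through a pipeline resource trigger. The two documents are separate YAML files shown in one listing. The pipeline name MyApp-Build and the alias buildPipeline are hypothetical, and the echo steps stand in for real build and deployment work.

```yaml
# File 1 (build pipeline): CI trigger with batching
trigger:
  batch: true
  branches:
    include:
    - main

steps:
- script: echo "Build and publish artifacts here"
---
# File 2 (deployment pipeline): no CI trigger; starts when the build pipeline completes
trigger: none

resources:
  pipelines:
  - pipeline: buildPipeline   # hypothetical alias for the resource
    source: MyApp-Build       # hypothetical name of the build pipeline
    trigger: true

steps:
- script: echo "Deploy here"
```

With this layout, approvals or long-running stages in the deployment pipeline do not delay the next batched build run.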

Paths

You can specify file paths to include or exclude.

# specific path build
trigger:
  branches:
    include:
    - main
    - releases/*
  paths:
    include:
    - docs
    exclude:
    - docs/README.md

When you specify paths, you must explicitly specify branches to trigger on if you are using Azure DevOps Server 2019.1 or lower. You can't trigger a pipeline with only a path filter; you must also have a branch filter, and the changed files that match the path filter must be from a branch that matches the branch filter. If you are using Azure DevOps Server 2020 or newer, you can omit branches to filter on all branches in conjunction with the path filter.

Wildcards are supported for path filters. For instance, you can include all paths that match src/app/**/myapp*. You can use wildcard characters (**, *, or ?) when specifying path filters.

- Paths are always specified relative to the root of the repository.

- If you don't set path filters, then the root folder of the repo is implicitly included by default.

- If you exclude a path, you cannot also include it unless you qualify it to a deeper folder. For example, if you exclude /tools, then you could include /tools/trigger-runs-on-these.

- The order of path filters doesn't matter.

- Paths in Git are case-sensitive. Be sure to use the same case as the real folders.

- You cannot use variables in paths, as variables are evaluated at runtime (after the trigger has fired).
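The rules above can be sketched in a single trigger. This is an illustrative fragment only: it reuses the src/app/**/myapp* pattern and the tools folder names from the text, and writes them without a leading slash, following the style of the earlier path example. The wildcard filter assumes a version that supports wildcards in paths.

```yaml
trigger:
  branches:
    include:
    - main
  paths:
    include:
    - src/app/**/myapp*             # wildcard path filter
    - tools/trigger-runs-on-these   # deeper folder re-included under an excluded path
    exclude:
    - tools                         # everything else under tools is ignored
```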

Tags

In addition to specifying tags in the branches lists as covered in the previous section, you can directly specify tags to include or exclude:

# specific tag
trigger:
  tags:
    include:
    - v2.*
    exclude:
    - v2.0

If you don't specify any tag triggers, then by default, tags will not trigger pipelines.

Important

If you specify tags in combination with branch filters, the trigger will fire if either the branch filter is satisfied or the tag filter is satisfied. For example, if a pushed tag satisfies the branch filter, the pipeline triggers even if the tag is excluded by the tag filter, because the push satisfied the branch filter.
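A minimal sketch of combining the two filters, reusing the branch and tag names from the earlier examples:

```yaml
trigger:
  branches:
    include:
    - main     # a push to main satisfies the branch filter
  tags:
    include:
    - v2.*     # pushing a tag such as v2.1 satisfies the tag filter
```

Because the filters are combined with "or", either a push to main or a push of a matching tag starts a run.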

Opting out of CI

Disabling the CI trigger

You can opt out of CI triggers entirely by specifying trigger: none.

# A pipeline with no CI trigger

trigger: none

Important

When you push a change to a branch, the YAML file in that branch is evaluated to determine if a CI run should be started.

Skipping CI for individual pushes

You can also tell Azure Pipelines to skip running a pipeline that a push would normally trigger. Just include [skip ci] in the message or description of any of the commits that are part of a push, and Azure Pipelines will skip running CI for this push. You can also use any of the following variations.

- [skip ci] or [ci skip]

- skip-checks: true or skip-checks:true

- [skip azurepipelines] or [azurepipelines skip]

- [skip azpipelines] or [azpipelines skip]

- [skip azp] or [azp skip]

- ***NO_CI***

Using the trigger type in conditions

It is a common scenario to run different steps, jobs, or stages in your pipeline depending on the type of trigger that started the run. You can do this using the system variable Build.Reason. For example, add the following condition to your step, job, or stage to exclude it from PR validations.

condition: and(succeeded(), ne(variables['Build.Reason'], 'PullRequest'))
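For context, here is a small sketch of where that condition sits on a step. The echo commands are placeholders.

```yaml
steps:
- script: echo "Runs for every trigger type"

- script: echo "Skipped during PR validation"
  condition: and(succeeded(), ne(variables['Build.Reason'], 'PullRequest'))
```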

Behavior of triggers when new branches are pushed

It is common to configure multiple pipelines for the same repository. For instance, you may have one pipeline to build the docs for your app and another to build the source code. You may configure CI triggers with appropriate branch filters and path filters in each of these pipelines. For instance, you may want one pipeline to trigger when you push an update to the docs folder, and another one to trigger when you push an update to your application code. In these cases, you need to understand how the pipelines are triggered when a new branch is created.

Here is the behavior when you push a new branch (that matches the branch filters) to your repository:

- If your pipeline has path filters, it will be triggered only if the new branch has changes to files that match that path filter.

- If your pipeline does not have path filters, it will be triggered even if there are no changes in the new branch.
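To make the difference concrete, the sketch below shows two hypothetical pipelines for the same repository as separate YAML files in one listing. The docs folder name follows the earlier path example.

```yaml
# File 1 (docs pipeline): has a path filter
trigger:
  branches:
    include:
    - main
    - releases/*
  paths:
    include:
    - docs
---
# File 2 (code pipeline): no path filter
trigger:
  branches:
    include:
    - main
    - releases/*
```

If you push a new releases branch that contains no changes under docs, only the second pipeline is triggered.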

Wildcards

When specifying a branch, tag, or path, you may use an exact name or a wildcard.

Wildcard patterns allow * to match zero or more characters and ? to match a single character.

- If you start your pattern with * in a YAML pipeline, you must wrap the pattern in quotes, like "*-releases".

- For branches and tags:

  - A wildcard may appear anywhere in the pattern.

- For paths:

  - In Azure DevOps Server 2022 and higher, including Azure DevOps Services, a wildcard may appear anywhere within a path pattern and you may use * or ?.

  - In Azure DevOps Server 2020 and lower, you may include * as the final character, but it doesn't do anything differently from specifying the directory name by itself. You may not include * in the middle of a path filter, and you may not use ?.

trigger:
  branches:
    include:
    - main
    - releases/*
    - feature/*
    exclude:
    - releases/old*
    - feature/*-working
  paths:
    include:
    - docs/*.md

PR triggers

Pull request (PR) triggers cause a pipeline to run whenever you open a pull request, or when you push changes to it. In Azure Repos Git, this functionality is implemented using branch policies. To enable PR validation, navigate to the branch policies for the desired branch, and configure the Build validation policy for that branch. For more information, see Configure branch policies.

If you have an open PR and you push changes to its source branch, multiple pipelines may run:

- The pipelines specified by the target branch's build validation policy will run on the merge commit (the merged code between the source and target branches of the pull request), regardless of whether any pushed commits have messages or descriptions that contain [skip ci] (or any of its variants).

- The pipelines triggered by changes to the PR's source branch will run if no pushed commits have messages or descriptions that contain [skip ci] (or any of its variants). If at least one pushed commit contains [skip ci], the pipelines will not run.

Finally, after you merge the PR, Azure Pipelines will run the CI pipelines triggered by pushes to the target branch, even if some of the merged commits' messages or descriptions contain [skip ci] (or any of its variants).

Note

To configure validation builds for an Azure Repos Git repository, you must be a project administrator of its project.

Note

Draft pull requests do not trigger a pipeline even if you configure a branch policy.

Validate contributions from forks

Building pull requests from Azure Repos forks is no different from building pull requests within the same repository or project. You can create forks only within the same organization that your project is part of.

Limit job authorization scope

Azure Pipelines provides several security settings to configure the job authorization scope that your pipelines run with.

Limit job authorization scope to current project

Azure Pipelines provides two Limit job authorization scope to current project settings:

- Limit job authorization scope to current project for non-release pipelines - This setting applies to YAML pipelines and classic build pipelines. This setting does not apply to classic release pipelines.

- Limit job authorization scope to current project for release pipelines - This setting applies to classic release pipelines only.

Pipelines run with collection scoped access tokens unless the relevant setting for the pipeline type is enabled. The Limit job authorization scope settings allow you to reduce the scope of access for all pipelines to the current project. This can impact your pipeline if you are accessing an Azure Repos Git repository in a different project in your organization.

If your Azure Repos Git repository is in a different project than your pipeline, and the Limit job authorization scope setting for your pipeline type is enabled, you must grant permission to the build service identity for your pipeline to the second project. For more information, see Manage build service account permissions.

Azure Pipelines provides a security setting to configure the job authorization scope that your pipelines run with.

- Limit job authorization scope to current project - This setting applies to YAML pipelines and classic build pipelines. This setting does not apply to classic release pipelines.

Pipelines run with collection scoped access tokens unless Limit job authorization scope to current project is enabled. This setting allows you to reduce the scope of access for all pipelines to the current project. This can impact your pipeline if you are accessing an Azure Repos Git repository in a different project in your organization.

If your Azure Repos Git repository is in a different project than your pipeline, and the Limit job authorization scope setting is enabled, you must grant permission to the build service identity for your pipeline to the second project. For more information, see Job authorization scope.

For more information on Limit job authorization scope, see Understand job access tokens.

Limit job authorization scope to referenced Azure DevOps repositories

Pipelines can access any Azure DevOps repositories in authorized projects, as described in the previous Limit job authorization scope to current project section, unless Limit job authorization scope to referenced Azure DevOps repositories is enabled. With this option enabled, you can reduce the scope of access for all pipelines to only Azure DevOps repositories explicitly referenced by a checkout step or a uses statement in the pipeline job that uses that repository.

To configure this setting, navigate to Pipelines, Settings at either Organization settings or Project settings. If enabled at the organization level, the setting is grayed out and unavailable at the project settings level.

When Limit job authorization scope to referenced Azure DevOps repositories is enabled, your YAML pipelines must explicitly reference any Azure Repos Git repositories you want to use in the pipeline as a checkout step in the job that uses the repository. You won't be able to fetch code using scripting tasks and git commands for an Azure Repos Git repository unless that repo is first explicitly referenced.

There are a few exceptions where you don't need to explicitly reference an Azure Repos Git repository before using it in your pipeline when Limit job authorization scope to referenced Azure DevOps repositories is enabled.

- If you do not have an explicit checkout step in your pipeline, it is as if you have a checkout: self step, and the self repository is checked out.

- If you are using a script to perform read-only operations on a repository in a public project, you don't need to reference the public project repository in a checkout step.

- If you are using a script that provides its own authentication to the repo, such as a PAT, you don't need to reference that repository in a checkout step.

For example, when Limit job authorization scope to referenced Azure DevOps repositories is enabled, if your pipeline is in the FabrikamProject/Fabrikam repo in your organization, and you want to use a script to check out the FabrikamProject/FabrikamTools repo, you must either reference this repository in a checkout step or with a uses statement.

If you are already checking out the FabrikamTools repository in your pipeline using a checkout step, you may subsequently use scripts to interact with that repository.

steps:
- checkout: git://FabrikamFiber/FabrikamTools # Azure Repos Git repository in the same organization
- script: # Do something with that repo

# Or you can reference it with a uses statement in the job
uses:
  repositories: # List of referenced repositories
  - FabrikamTools # Repository reference to FabrikamTools
steps:
- script: # Do something with that repo like clone it

Note

For many scenarios, multi-repo checkout can be leveraged, removing the need to use scripts to check out additional repositories in your pipeline. For more information, see Check out multiple repositories in your pipeline.

Protect access to repositories in YAML pipelines

Pipelines can access any Azure DevOps repositories in authorized projects, as described in the previous Limit job authorization scope to current project section, unless Protect access to repositories in YAML pipelines is enabled. With this option enabled, you can reduce the scope of access for all pipelines to only Azure DevOps repositories explicitly referenced by a checkout step or a uses statement in the pipeline job that uses that repository.

To configure this setting, navigate to Pipelines, Settings at either Organization settings or Project settings. If enabled at the organization level, the setting is grayed out and unavailable at the project settings level.

Important

Protect access to repositories in YAML pipelines is enabled by default for new organizations and projects created after May 2020. When Protect access to repositories in YAML pipelines is enabled, your YAML pipelines must explicitly reference any Azure Repos Git repositories you want to use in the pipeline as a checkout step in the job that uses the repository. You won't be able to fetch code using scripting tasks and git commands for an Azure Repos Git repository unless that repo is first explicitly referenced.

There are a few exceptions where you don't need to explicitly reference an Azure Repos Git repository before using it in your pipeline when Protect access to repositories in YAML pipelines is enabled.

- If you do not have an explicit checkout step in your pipeline, it is as if you have a checkout: self step, and the self repository is checked out.

- If you are using a script to perform read-only operations on a repository in a public project, you don't need to reference the public project repository in a checkout step.

- If you are using a script that provides its own authentication to the repo, such as a PAT, you don't need to reference that repository in a checkout step.

For example, when Protect access to repositories in YAML pipelines is enabled, if your pipeline is in the FabrikamProject/Fabrikam repo in your organization, and you want to use a script to check out the FabrikamProject/FabrikamTools repo, you must either reference this repository in a checkout step or with a uses statement.

If you are already checking out the FabrikamTools repository in your pipeline using a checkout step, you may subsequently use scripts to interact with that repository.

steps:
- checkout: git://FabrikamFiber/FabrikamTools # Azure Repos Git repository in the same organization
- script: # Do something with that repo

# Or you can reference it with a uses statement in the job
uses:
  repositories: # List of referenced repositories
  - FabrikamTools # Repository reference to FabrikamTools
steps:
- script: # Do something with that repo like clone it
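A fuller sketch of the uses approach is shown below, assuming the repository is declared as a repository resource so that the uses statement has something to refer to. The job name UseTools and the organization URL https://dev.azure.com/fabrikam are hypothetical, and the clone command is one possible way to use the job access token from a script, not the only one.

```yaml
resources:
  repositories:
  - repository: FabrikamTools              # alias referenced by the uses statement
    type: git                              # Azure Repos Git
    name: FabrikamProject/FabrikamTools    # project/repository

jobs:
- job: UseTools                            # hypothetical job name
  uses:
    repositories:
    - FabrikamTools
  steps:
  - checkout: self
  - script: >
      git -c http.extraheader="AUTHORIZATION: bearer $(System.AccessToken)"
      clone https://dev.azure.com/fabrikam/FabrikamProject/_git/FabrikamTools
    displayName: Clone FabrikamTools with the job access token
```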

Note

For many scenarios, multi-repo checkout can be leveraged, removing the need to use scripts to check out additional repositories in your pipeline. For more information, see Check out multiple repositories in your pipeline.

Checkout

When a pipeline is triggered, Azure Pipelines pulls your source code from the Azure Repos Git repository. You can control various aspects of how your code is checked out.

Note

When you include a checkout step in your pipeline, we run the following command: git -c fetch --force --tags --prune --prune-tags --progress --no-recurse-submodules origin --depth=1.

If this default doesn't meet your needs, you can exclude the built-in checkout by specifying checkout: none and then use a script task to perform your own checkout.

Preferred version of Git

The Windows agent comes with its own copy of Git.

If you prefer to supply your own Git rather than use the included copy, set System.PreferGitFromPath to true.

The System.PreferGitFromPath setting is always true on non-Windows agents.
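As a sketch, and assuming the setting is supplied as a pipeline variable, it could be declared in YAML like this:

```yaml
variables:
  System.PreferGitFromPath: true   # use the Git found on the agent's PATH instead of the bundled copy
```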

Checkout path

If you're checking out a single repository, by default, your source code is checked out into a directory called s. For YAML pipelines, you can change this by specifying checkout with a path. The specified path is relative to $(Agent.BuildDirectory). For example: if the checkout path value is mycustompath and $(Agent.BuildDirectory) is C:\agent\_work\1, then the source code is checked out into C:\agent\_work\1\mycustompath.

If you're using multiple checkout steps and checking out multiple repositories, and not explicitly specifying the folder using path, each repository is placed in a subfolder of s named after the repository. For example if you check out two repositories named tools and code, the source code is checked out into C:\agent\_work\1\s\tools and C:\agent\_work\1\s\code.

Please note that the checkout path value can't be set to go up any directory levels above $(Agent.BuildDirectory), so path\..\anotherpath will result in a valid checkout path (i.e. C:\agent\_work\1\anotherpath), but a value like ..\invalidpath will not (i.e. C:\agent\_work\invalidpath).

You can configure the path setting in the Checkout step of your pipeline.

steps:
- checkout: self  # self represents the repo where the initial Pipelines YAML file was found
  clean: boolean  # whether to fetch clean each time
  fetchDepth: number  # the depth of commits to ask Git to fetch
  lfs: boolean  # whether to download Git-LFS files
  submodules: true | recursive  # set to 'true' for a single level of submodules or 'recursive' to get submodules of submodules
  path: string  # path to check out source code, relative to the agent's build directory (e.g. \_work\1)
  persistCredentials: boolean  # set to 'true' to leave the OAuth token in the Git config after the initial fetch
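A concrete instance of the path setting, using the mycustompath value from the example above:

```yaml
steps:
- checkout: self
  path: mycustompath   # relative to $(Agent.BuildDirectory)
```

With $(Agent.BuildDirectory) set to C:\agent\_work\1, the sources land in C:\agent\_work\1\mycustompath instead of the default s directory.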

Submodules

You can configure the submodules setting in the Checkout step of your pipeline if you want to download files from submodules.

steps:
- checkout: self  # self represents the repo where the initial Pipelines YAML file was found
  clean: boolean  # whether to fetch clean each time
  fetchDepth: number  # the depth of commits to ask Git to fetch
  lfs: boolean  # whether to download Git-LFS files
  submodules: true | recursive  # set to 'true' for a single level of submodules or 'recursive' to get submodules of submodules
  path: string  # path to check out source code, relative to the agent's build directory (e.g. \_work\1)
  persistCredentials: boolean  # set to 'true' to leave the OAuth token in the Git config after the initial fetch

The build pipeline will check out your Git submodules as long as they are:

- Unauthenticated: A public, unauthenticated repo with no credentials required to clone or fetch.

- Authenticated:

  - Contained in the same project as the Azure Repos Git repo specified above. The same credentials that are used by the agent to get the sources from the main repository are also used to get the sources for submodules.

  - Added by using a URL relative to the main repository. For example:

This one would be checked out:

git submodule add ../../../FabrikamFiberProject/_git/FabrikamFiber FabrikamFiber

In this example the submodule refers to a repo (FabrikamFiber) in the same Azure DevOps organization, but in a different project (FabrikamFiberProject). The same credentials that are used by the agent to get the sources from the main repository are also used to get the sources for submodules. This requires that the job access token has access to the repository in the second project. If you restricted the job access token as explained in the section above, then you won't be able to do this. You can allow the job access token to access the repo in the second project by either (a) explicitly granting access to the project build service account in the second project or (b) using collection-scoped access tokens instead of project-scoped tokens for the entire organization. For more information about these options and their security implications, see Access repositories, artifacts, and other resources.

This one would not be checked out:

git submodule add https://[email protected]/fabrikam-fiber/FabrikamFiberProject/_git/FabrikamFiber FabrikamFiber

Alternative to using the Checkout submodules option

In some cases you can't use the Checkout submodules option. You might have a scenario where a different set of credentials are needed to access the submodules. This can happen, for example, if your main repository and submodule repositories aren't stored in the same Azure DevOps organization, or if your job access token doesn't have access to the repository in a different project.

If you can't use the Checkout submodules option, then you can instead use a custom script step to fetch submodules.

First, get a personal access token (PAT) and prefix it with pat:.

Next, base64-encode this prefixed string to create a basic auth token.

Finally, add this script to your pipeline:

git -c http.https://<url of submodule repository>.extraheader="AUTHORIZATION: Basic <BASE64_ENCODED_STRING>" submodule update --init --recursive

Be sure to replace "<BASE64_ENCODED_STRING>" with your Base64-encoded "pat:token" string.

Use a secret variable in your project or build pipeline to store the basic auth token that you generated. Use that variable to populate the secret in the above Git command.
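The steps above could be wired into a pipeline roughly as follows. This is a sketch under stated assumptions: SubmoduleBasicAuthToken is a hypothetical secret variable that holds the Base64-encoded "pat:token" string, and the SUBMODULE_URL value is a hypothetical stand-in for the URL of your submodule repository. Secret variables are mapped explicitly through env so that the script can read them.

```yaml
steps:
- checkout: self   # main repository only; submodules are fetched by the script below

- bash: |
    set -euo pipefail
    # Send the basic auth token as an extra HTTP header, scoped to the submodule URL only.
    git -c "http.${SUBMODULE_URL}.extraheader=AUTHORIZATION: Basic ${BASIC_AUTH_TOKEN}" \
      submodule update --init --recursive
  displayName: Fetch submodules with a PAT
  env:
    SUBMODULE_URL: https://dev.azure.com/other-org   # hypothetical URL of the submodule repository
    BASIC_AUTH_TOKEN: $(SubmoduleBasicAuthToken)     # hypothetical secret variable
```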

Note

Q: Why can't I use a Git credential manager on the agent? A: Storing the submodule credentials in a Git credential manager installed on your private build agent is usually not effective as the credential manager may prompt you to re-enter the credentials whenever the submodule is updated. This isn't desirable during automated builds when user interaction isn't possible.

Sync tags

Important

The sync tags feature is supported in Azure Repos Git with Azure DevOps Server 2022.1 and higher.

The checkout step uses the --tags option when fetching the contents of a Git repository. This causes the server to fetch all tags as well as all objects that are pointed to by those tags. This increases the time to run the task in a pipeline, particularly if you have a large repository with many tags. Furthermore, the checkout step syncs tags even when you enable the shallow fetch option, thereby possibly defeating its purpose. To reduce the amount of data fetched or pulled from a Git repository, Microsoft has added a new option to the checkout step to control the behavior of syncing tags. This option is available in both classic and YAML pipelines.

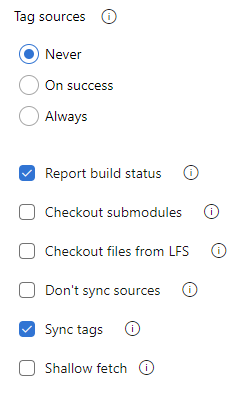

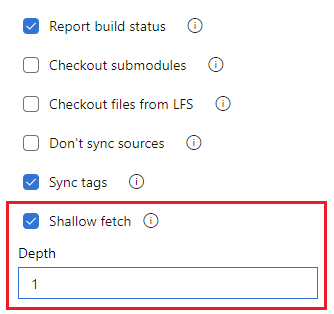

Whether to synchronize tags when checking out a repository can be configured in YAML by setting the fetchTags property, and in the UI by configuring the Sync tags setting.

You can configure the fetchTags setting in the Checkout step of your pipeline.

To configure the setting in YAML, set the fetchTags property.

steps:

- checkout: self

fetchTags: true

You can also configure this setting by using the Sync tags option in the pipeline settings UI.

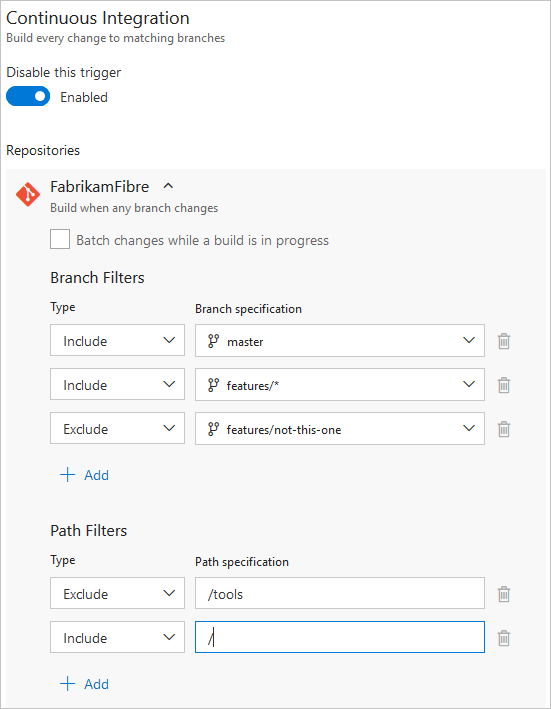

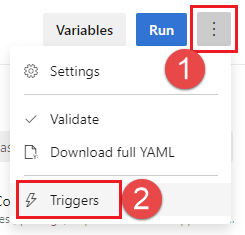

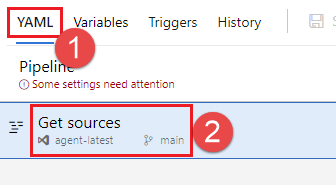

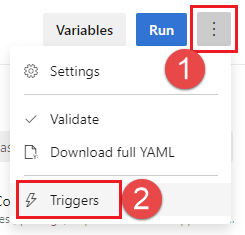

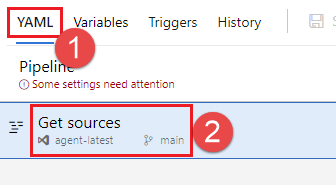

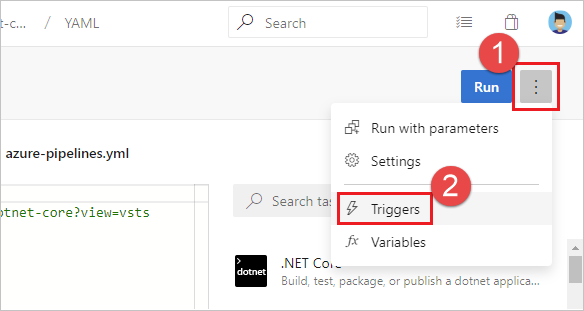

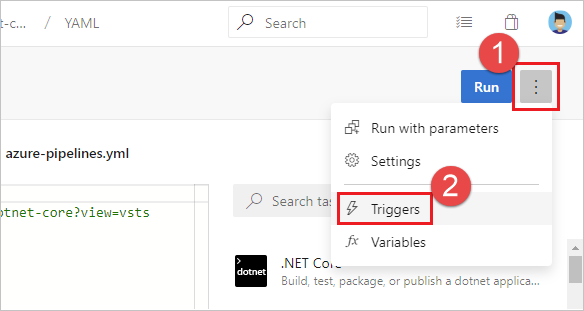

Edit your YAML pipeline and choose More actions, Triggers.

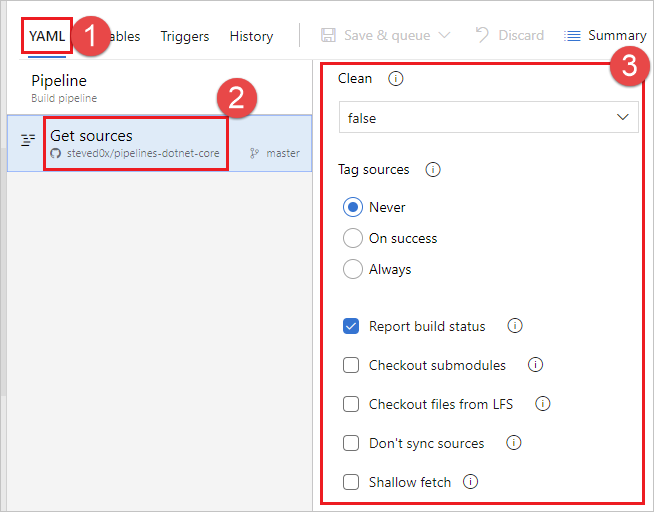

Choose YAML, Get sources.

Configure the Sync tags setting.

Note

If you explicitly set fetchTags in your checkout step, that setting takes priority over the setting configured in the pipeline settings UI.

Default behavior

- For existing pipelines created before the release of Azure DevOps sprint 209, released in September 2022, the default for syncing tags remains the same as the existing behavior before the Sync tags option was added, which is true.

- For new pipelines created after Azure DevOps sprint release 209, the default for syncing tags is false.


Shallow fetch

You may want to limit how far back in history to download. Effectively this results in git fetch --depth=n. If your repository is large, this option might make your build pipeline more efficient. Your repository might be large if it has been in use for a long time and has sizeable history. It also might be large if you added and later deleted large files.

Note

In some organizations, new pipelines created after the September 2022 Azure DevOps sprint 209 update have Shallow fetch enabled by default and configured with a depth of 1. Previously the default wasn't to shallow fetch.

To check your pipeline, view the Shallow fetch setting in the pipeline settings UI as described in the following section.

You can configure the fetchDepth setting in the Checkout step of your pipeline.

steps:
- checkout: self  # self represents the repo where the initial Pipelines YAML file was found
  clean: boolean  # whether to fetch clean each time
  fetchDepth: number  # the depth of commits to ask Git to fetch
  lfs: boolean  # whether to download Git-LFS files
  submodules: true | recursive  # set to 'true' for a single level of submodules or 'recursive' to get submodules of submodules
  path: string  # path to check out source code, relative to the agent's build directory (e.g. \_work\1)
  persistCredentials: boolean  # set to 'true' to leave the OAuth token in the Git config after the initial fetch

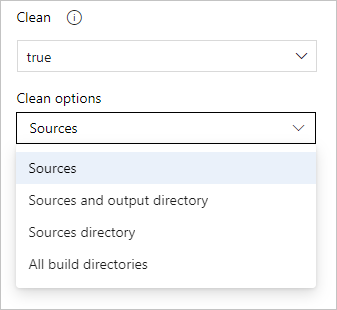

You can also configure fetch depth by setting the Shallow depth option in the pipeline settings UI.

Edit your YAML pipeline and choose More actions, Triggers.

Choose YAML, Get sources.

Configure the Shallow fetch setting. Uncheck Shallow fetch to disable shallow fetch, or check the box and enter a Depth to enable shallow fetch.

Note

If you explicitly set fetchDepth in your checkout step, that setting takes priority over the setting configured in the pipeline settings UI. Setting fetchDepth: 0 fetches all history and overrides the Shallow fetch setting.

In these cases this option can help you conserve network and storage resources. It might also save time. The reason it doesn't always save time is because in some situations the server might need to spend time calculating the commits to download for the depth you specify.

Note

When the pipeline is started, the branch to build is resolved to a commit ID. Then, the agent fetches the branch and checks out the desired commit. There is a small window between when a branch is resolved to a commit ID and when the agent performs the checkout. If the branch updates rapidly and you set a very small value for shallow fetch, the commit may not exist when the agent attempts to check it out. If that happens, increase the shallow fetch depth setting.
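As a short sketch, the depth can be set explicitly on the checkout step. The value 50 is an arbitrary illustration, not a recommendation.

```yaml
steps:
- checkout: self
  fetchDepth: 50   # fetch the last 50 commits; use 0 to fetch all history
```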

Don't sync sources

You may want to skip fetching new commits. This option can be useful in cases when you want to:

- Git init, config, and fetch using your own custom options.

- Use a build pipeline to run only automation (for example, some scripts) that does not depend on code in version control.

You can configure the Don't sync sources setting in the Checkout step of your pipeline, by setting checkout: none.

steps:

- checkout: none # Don't sync sources

Note

When you use this option, the agent also skips running Git commands that clean the repo.
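The first use case, fetching with your own Git options, might look roughly like the sketch below. It is an illustration under several assumptions, not the agent's own checkout logic: it assumes the working directory is empty (for example, on a fresh agent), that the job access token is allowed to read the repository, and that fetching the tip of the triggering branch is acceptable. The fetched tip may be newer than the commit the run was resolved to.

```yaml
steps:
- checkout: none   # the agent does not sync sources or run its Git clean commands

# Illustrative custom fetch; adjust the Git options to your needs.
# Assumes an empty working directory, otherwise 'git remote add' fails.
- script: |
    git init
    git remote add origin $(Build.Repository.Uri)
    git -c http.extraheader="AUTHORIZATION: bearer $(System.AccessToken)" fetch --depth=1 origin $(Build.SourceBranch)
    git checkout FETCH_HEAD
  displayName: Custom Git fetch
```

Passing the token through http.extraheader for a single command keeps it out of the stored Git configuration, which is the opposite of what persistCredentials does.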

Clean build

You can perform different forms of cleaning the working directory of your self-hosted agent before a build runs.

In general, for faster performance of your self-hosted agents, don't clean the repo. In this case, to get the best performance, make sure you're also building incrementally by disabling any Clean option of the task or tool you're using to build.

If you do need to clean the repo (for example to avoid problems caused by residual files from a previous build), your options are below.

Note

Cleaning isn't effective if you're using a Microsoft-hosted agent because you'll get a new agent every time.

You can configure the clean setting in the Checkout step of your pipeline.

steps:

- checkout: self # self represents the repo where the initial Pipelines YAML file was found

  clean: boolean # whether to fetch clean each time

  fetchDepth: number # the depth of commits to ask Git to fetch

  lfs: boolean # whether to download Git-LFS files

  submodules: true | recursive # set to 'true' for a single level of submodules or 'recursive' to get submodules of submodules

  path: string # path to check out source code, relative to the agent's build directory (e.g. \_work\1)

  persistCredentials: boolean # set to 'true' to leave the OAuth token in the Git config after the initial fetch

When clean is set to true, the build pipeline undoes any changes in $(Build.SourcesDirectory). More specifically, the following Git commands are executed prior to fetching the source.

git clean -ffdx

git reset --hard HEAD
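A minimal checkout step that turns this behavior on could be written as follows. This is a sketch for a self-hosted agent; the other checkout properties are left at their defaults.

```yaml
steps:
- checkout: self
  clean: true   # run git clean -ffdx and git reset --hard HEAD before fetching
```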

For more options, you can configure the workspace setting of a Job.

jobs:

- job: string # name of the job, A-Z, a-z, 0-9, and underscore

  ...

  workspace:

    clean: outputs | resources | all # what to clean up before the job runs

This gives the following clean options.

- outputs: Same operation as the clean setting described in the previous checkout task, plus: Deletes and recreates $(Build.BinariesDirectory). Note that the $(Build.ArtifactStagingDirectory) and $(Common.TestResultsDirectory) are always deleted and recreated prior to every build regardless of any of these settings.

- resources: Deletes and recreates $(Build.SourcesDirectory). This results in initializing a new, local Git repository for every build.

- all: Deletes and recreates $(Agent.BuildDirectory). This results in initializing a new, local Git repository for every build.
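As an illustration of the workspace setting, the job below asks for the most thorough option. The job name and the script step are hypothetical placeholders; only the workspace block reflects the setting described above.

```yaml
jobs:
- job: Build            # hypothetical job name
  workspace:
    clean: all          # deletes and recreates $(Agent.BuildDirectory) before the job runs
  steps:
  - checkout: self
  - script: echo "Building from a freshly created build directory"
```

Choosing outputs instead would keep the existing local repository and apply the lighter cleanup described in the first option.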

Label sources

You may want to label your source code files to enable your team to easily identify which version of each file is included in the completed build. You also have the option to specify whether the source code should be labeled for all builds or only for successful builds.

You can't currently configure this setting in YAML, but you can in the classic editor. When editing a YAML pipeline, you can access the classic editor by choosing Triggers from the YAML editor menu.

From the classic editor, choose YAML, choose the Get sources task, and then configure the desired properties there.

In the Tag format you can use user-defined and predefined variables that have a scope of "All." For example:

$(Build.DefinitionName)_$(Build.DefinitionVersion)_$(Build.BuildId)_$(Build.BuildNumber)_$(My.Variable)

The first four variables are predefined. My.Variable can be defined by you on the variables tab.

The pipeline labels your sources with a Git tag.

Some build variables might yield a value that isn't a valid label. For example, variables such as $(Build.RequestedFor) and $(Build.DefinitionName) can contain white space. If the value contains white space, the tag isn't created.

After the sources are tagged by your build pipeline, an artifact with the Git ref refs/tags/{tag} is automatically added to the completed build. This gives your team additional traceability and a more user-friendly way to navigate from the build to the code that was built. The tag is considered a build artifact since it is produced by the build. When the build is deleted either manually or through a retention policy, the tag is also deleted.

FAQ

Problems related to Azure Repos integration fall into three categories:

- Failing triggers: My pipeline is not being triggered when I push an update to the repo.

- Failing checkout: My pipeline is being triggered, but it fails in the checkout step.

- Wrong version: My pipeline runs, but it is using an unexpected version of the source/YAML.

Failing triggers

I just created a new YAML pipeline with CI/PR triggers, but the pipeline isn't being triggered.

Follow each of these steps to troubleshoot your failing triggers:

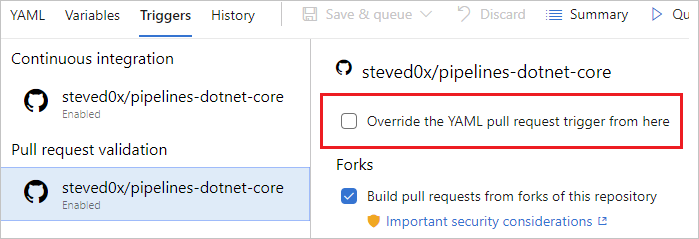

- Are your YAML CI or PR triggers overridden by pipeline settings in the UI? While editing your pipeline, choose ... and then Triggers. Check the Override the YAML trigger from here setting for the types of trigger (Continuous integration or Pull request validation) available for your repo.

- Are you configuring the PR trigger in the YAML file or in branch policies for the repo? For an Azure Repos Git repo, you cannot configure a PR trigger in the YAML file. You need to use branch policies.

- Is your pipeline paused or disabled? Open the editor for the pipeline, and then select Settings to check. If your pipeline is paused or disabled, then triggers do not work.

- Have you updated the YAML file in the correct branch? If you push an update to a branch, then the YAML file in that same branch governs the CI behavior. If you push an update to a source branch, then the YAML file resulting from merging the source branch with the target branch governs the PR behavior. Make sure that the YAML file in the correct branch has the necessary CI or PR configuration.

- Have you configured the trigger correctly? When you define a YAML trigger, you can specify both include and exclude clauses for branches, tags, and paths. Ensure that the include clause matches the details of your commit and that the exclude clause doesn't exclude them. Check the syntax for the triggers and make sure that it is accurate.

- Have you used variables in defining the trigger or the paths? That is not supported.

- Did you use templates for your YAML file? If so, make sure that your triggers are defined in the main YAML file. Triggers defined inside template files are not supported.

- Have you excluded the branches or paths to which you pushed your changes? Test by pushing a change to an included path in an included branch. Note that paths in triggers are case-sensitive. Make sure that you use the same case as those of real folders when specifying the paths in triggers.

- Did you just push a new branch? If so, the new branch may not start a new run. See the section "Behavior of triggers when new branches are created".
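When checking the trigger configuration against the steps above, it can help to compare it with a known-good shape. The sketch below uses the branch names from the earlier Branches example; the excluded branch pattern and the src and src/docs paths are hypothetical. All values are literals (no variables), and the block is meant to live in the main YAML file rather than in a template.

```yaml
trigger:
  branches:
    include:
    - main
    - releases/*
    exclude:
    - releases/old*     # hypothetical pattern
  paths:
    include:
    - src               # hypothetical folder; the case must match the real folder name
    exclude:
    - src/docs          # hypothetical folder
```

With this configuration, a push to main that only touches files under src/docs would not be expected to start a run, while a push that changes other files under src would.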

My CI or PR triggers have been working fine. But, they stopped working now.

First go through the troubleshooting steps in the previous question. Then, follow these additional steps:

- Do you have merge conflicts in your PR? For a PR that did not trigger a pipeline, open it and check whether it has a merge conflict. Resolve the merge conflict.

- Are you experiencing a delay in the processing of push or PR events? You can usually verify this by seeing if the issue is specific to a single pipeline or is common to all pipelines or repos in your project. If a push or a PR update to any of the repos exhibits this symptom, we might be experiencing delays in processing the update events. Check if we are experiencing a service outage on our status page. If the status page shows an issue, then our team must have already started working on it. Check the page frequently for updates on the issue.

I do not want users to override the list of branches for triggers when they update the YAML file. How can I do this?

Users with permissions to contribute code can update the YAML file and include/exclude additional branches. As a result, users can include their own feature or user branch in their YAML file and push that update to a feature or user branch. This may cause the pipeline to be triggered for all updates to that branch. If you want to prevent this behavior, then you can:

- Edit the pipeline in the Azure Pipelines UI.

- Navigate to the Triggers menu.

- Select Override the YAML continuous integration trigger from here.

- Specify the branches to include or exclude for the trigger.

When you follow these steps, any CI triggers specified in the YAML file are ignored.

I have multiple repositories in my YAML pipeline. How do I set up triggers for each repository?

See triggers in Using multiple repositories.

Failing checkout

I see the following error in the log file during checkout step. How do I fix it?

remote: TF401019: The Git repository with name or identifier XYZ does not exist or you do not have permissions for the operation you are attempting.

fatal: repository 'XYZ' not found

##[error] Git fetch failed with exit code: 128

Follow each of these steps to troubleshoot your failing checkout:

Does the repository still exist? First, make sure it does by opening it in the Repos page.

Are you accessing the repository using a script? If so, check the Limit job authorization scope to referenced Azure DevOps repositories setting. When Limit job authorization scope to referenced Azure DevOps repositories is enabled, you won't be able to check out Azure Repos Git repositories using a script unless they are explicitly referenced first in the pipeline.

What is the job authorization scope of the pipeline?

If the scope is collection:

- This may be an intermittent error. Re-run the pipeline.

- Someone may have removed access for the Project Collection Build Service account.

  - Go to Project settings for the project in which the repository exists. Select Repos > Repositories > specific repository, and then Security.

  - Check if Project Collection Build Service (your-collection-name) exists in the list of users.

  - Check if that account has Create tag and Read access.

If the scope is project:

- Is the repo in the same project as the pipeline?

  - Yes:

    - This may be an intermittent error. Re-run the pipeline.

    - Someone may have removed access for the Project Build Service account.

      - Go to Project settings for the project in which the repository exists. Select Repos > Repositories > specific repository, and then Security.

      - Check if your-project-name Build Service (your-collection-name) exists in the list of users.

      - Check if that account has Create tag and Read access.

  - No:

    - Is your pipeline in a public project?

      - Yes: You cannot access resources outside of your public project. Make the project private.

      - No: You need to configure permissions to access another repo in the same project collection.
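For the script-access case mentioned in the steps above, one way to reference a repository explicitly is to declare it as a repository resource and check it out. The sketch below is illustrative: the alias tools and the name OtherProject/tools are hypothetical, and the build service account still needs the permissions described in the steps above.

```yaml
resources:
  repositories:
  - repository: tools            # hypothetical alias used within this pipeline
    type: git                    # an Azure Repos Git repository
    name: OtherProject/tools     # hypothetical project and repository names

steps:
- checkout: self
- checkout: tools                # explicitly references the second repository
```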

Wrong version

A wrong version of the YAML file is being used in the pipeline. Why is that?

- For CI triggers, the YAML file that is in the branch you are pushing is evaluated to see if a CI build should be run.

- For PR triggers, the YAML file resulting from merging the source and target branches of the PR is evaluated to see if a PR build should be run.