Question

Thursday, December 20, 2012 9:40 PM

Hi,

I've been trying to build a WS2012 cluster using a Dell MD3200i (with the 4/12/12 release supported firmware) and am getting the following warnings when running the Storage tests:

Physical disk 468a27fb is visible from only one node and will not be tested. Validation requires that the disk be visible from at least two nodes. The disk is reported as visible at node: vm-node-01.dom.com

Physical disk e4841603 is visible from only one node and will not be tested. Validation requires that the disk be visible from at least two nodes. The disk is reported as visible at node: vm-node-02.dom.com

Physical disk 468a27fb is visible from only one node and will not be tested. Validation requires that the disk be visible from at least two nodes. The disk is reported as visible at node: vm-node-02.dom.com

Physical disk e4841603 is visible from only one node and will not be tested. Validation requires that the disk be visible from at least two nodes. The disk is reported as visible at node: vm-node-01.dom.com

No disks were found on which to perform cluster validation tests. To correct this, review the following possible causes:

* The disks are already clustered and currently Online in the cluster. When testing a working cluster, ensure that the disks that you want to test are Offline in the cluster.

* The disks are unsuitable for clustering. Boot volumes, system volumes, disks used for paging or dump files, etc., are examples of disks unsuitable for clustering.

* Review the "List Disks" test. Ensure that the disks you want to test are unmasked, that is, your masking or zoning does not prevent access to the disks. If the disks seem to be unmasked or zoned correctly but could not be tested, try restarting the servers before running the validation tests again.

* The cluster does not use shared storage. A cluster must use a hardware solution based either on shared storage or on replication between nodes. If your solution is based on replication between nodes, you do not need to rerun Storage tests. Instead, work with the provider of your replication solution to ensure that replicated copies of the cluster configuration database can be maintained across the nodes.

* The disks are Online in the cluster and are in maintenance mode.

No disks were found on which to perform cluster validation tests.

Stop: 20/12/2012 21:32:31.

Checking through the List Disks test, I can see that both nodes see the iSCSI disks and that they are mounted against the same LUNs (output is identical for both nodes):

| 2 | 2 | 468a27fb | 690B11C0000DE74D000005C950D15FE8 | 29T0062 | DELL MD32xxi Multi-Path Disk Device | iSCSI | Stor Port | 2:0:0:10 | Microsoft Multi-Path Bus Driver | True | Disk partition style is MBR. Disk type is BASIC. Disk uses Microsoft Multipath I/O (MPIO). |

| 3 | 3 | e4841603 | 690B11C0000DE7D2000002DA50BF581E | 29T0062 | DELL Universal Xport Multi-Path Disk Device | iSCSI | Stor Port | 2:0:0:31 | Microsoft Multi-Path Bus Driver | True | Disk partition style is MBR. Disk type is BASIC. Disk uses Microsoft Multipath I/O (MPIO). |

Can anyone shed any light on why it would appear to be seeing the disks in one test but not in the other?
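For anyone comparing the warnings side by side, here is a minimal Python sketch (not part of the validation tooling) that groups the four warnings above by disk signature. The regular expression and the `nodes_per_disk` helper are illustrative names, not anything the validation wizard provides; the warning text and the disk/node pairs are copied from the report.

```python
import re
from collections import defaultdict

# Matches the warning wording quoted in the validation report.
WARNING_RE = re.compile(
    r"Physical disk (?P<disk>[0-9a-f]+) is visible from only one node"
    r".*?reported as visible at node: (?P<node>\S+)"
)

# Warning text as it appears in the report, with placeholders for the parts that vary.
TEMPLATE = (
    "Physical disk {disk} is visible from only one node and will not be tested. "
    "Validation requires that the disk be visible from at least two nodes. "
    "The disk is reported as visible at node: {node}"
)

# Disk/node pairs in the order the report lists them.
PAIRS = [
    ("468a27fb", "vm-node-01.dom.com"),
    ("e4841603", "vm-node-02.dom.com"),
    ("468a27fb", "vm-node-02.dom.com"),
    ("e4841603", "vm-node-01.dom.com"),
]

report = "\n".join(TEMPLATE.format(disk=d, node=n) for d, n in PAIRS)


def nodes_per_disk(report_text):
    """Map each disk signature to the set of nodes that reported seeing it."""
    seen = defaultdict(set)
    for match in WARNING_RE.finditer(report_text):
        seen[match.group("disk")].add(match.group("node"))
    return dict(seen)


if __name__ == "__main__":
    for disk, nodes in sorted(nodes_per_disk(report).items()):
        print(disk, sorted(nodes))
```

Grouped this way, each disk signature is reported by both nodes, which is what makes the "visible from only one node" wording so confusing.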

All replies (14)

Monday, December 31, 2012 11:12 AM ✅Answered | 1 vote

Sorry Adam, I probably should have asked "is your cluster hosted on VM guests?". From your response I think your cluster is physical. The reason I was asking was that there is a storage validation issue when creating virtual clusters, caused by the VM client tools, that makes validation fail. The solution is to remove the client tools (VMware Tools, VirtualBox Guest Additions, etc.) from the guests while you validate and create the cluster. It doesn't sound like this is your issue this time, though.

Couple of points.

- Tim mentioned the multi-volume disk issue. I know you said this is a red herring, but he is right, you should still remove them.

- Have you persisted the iSCSI targets?

- Personally I'd remove the targets and re-add them in. Something is obviously not right with them.

- Shared storage is not a prerequisite for Windows clustering, so there is no problem with creating the cluster anyway. The disk issue can still be troubleshot and resolved after creation.

Regards,

Mark Broadbent.

Contact me through (twitter|blog|SQLCloud)

Please click "Propose As Answer" if a post solves your problem

or "Vote As Helpful" if a post has been useful to you

Watch my sessions at the PASS Summit 2012

Thursday, December 20, 2012 10:18 PM

Why do you use iSCSI and not direct SAS connection and clustered storage spaces?

Friday, December 21, 2012 5:36 AM | 1 vote

Hi,

The error report lists some possible reasons for such issues:

- The disks are already clustered and currently Online in the cluster

- The disks are unsuitable for clustering

- Review the "List Disks" test. Ensure that the disks you want to test are unmasked, that is, your masking or zoning does not prevent access to the disks.

- The cluster does not use shared storage.

- The disks are Online in the cluster and are in maintenance mode.

Were these two LUNs newly created for the cluster? Are they accessed by any other device? Since you are using the iSCSI initiator to connect to the storage, check the disks on a server that you want to include in the failover cluster: click Start, click Administrative Tools, click Computer Management, and then click Disk Management. (If the User Account Control dialog box appears, confirm that the action it displays is what you want, and then click Continue.) In Disk Management, confirm that the cluster disks are visible.

If possible, give us some screen captures of your iSCSI initiator and Disk Management configuration.

For more information, please refer to the following Microsoft articles:

Set Up Shared Storage for Servers in the Failover Cluster

http://technet.microsoft.com/en-us/library/cc719005(v=WS.10).aspx

Understanding Cluster Validation Tests: Storage

http://technet.microsoft.com/en-us/library/cc771259.aspx

Understanding Requirements for Failover Clusters

http://technet.microsoft.com/en-us/library/cc771404.aspx

Hope this helps!

If you are TechNet Subscription user and have any feedback on our support quality, please send your feedback here.

Lawrence

TechNet Community Support

Friday, December 21, 2012 10:09 AM

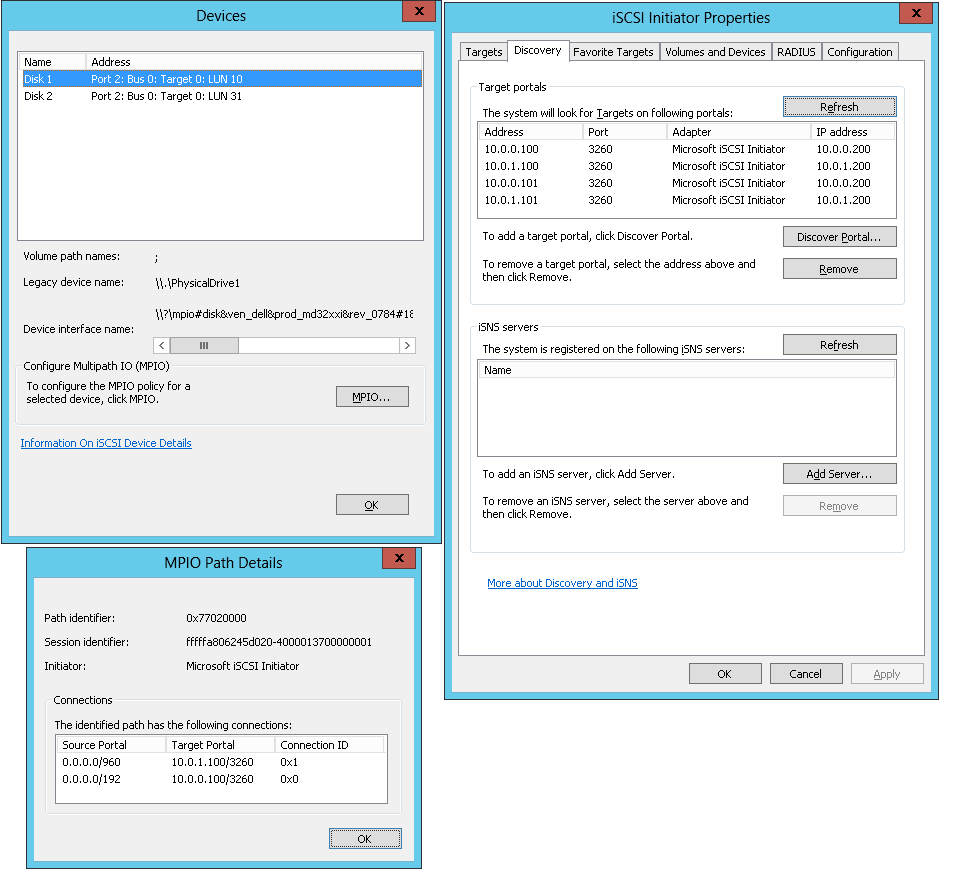

As requested, a bunch of screenshots:

Node1 iSCSI config pages:

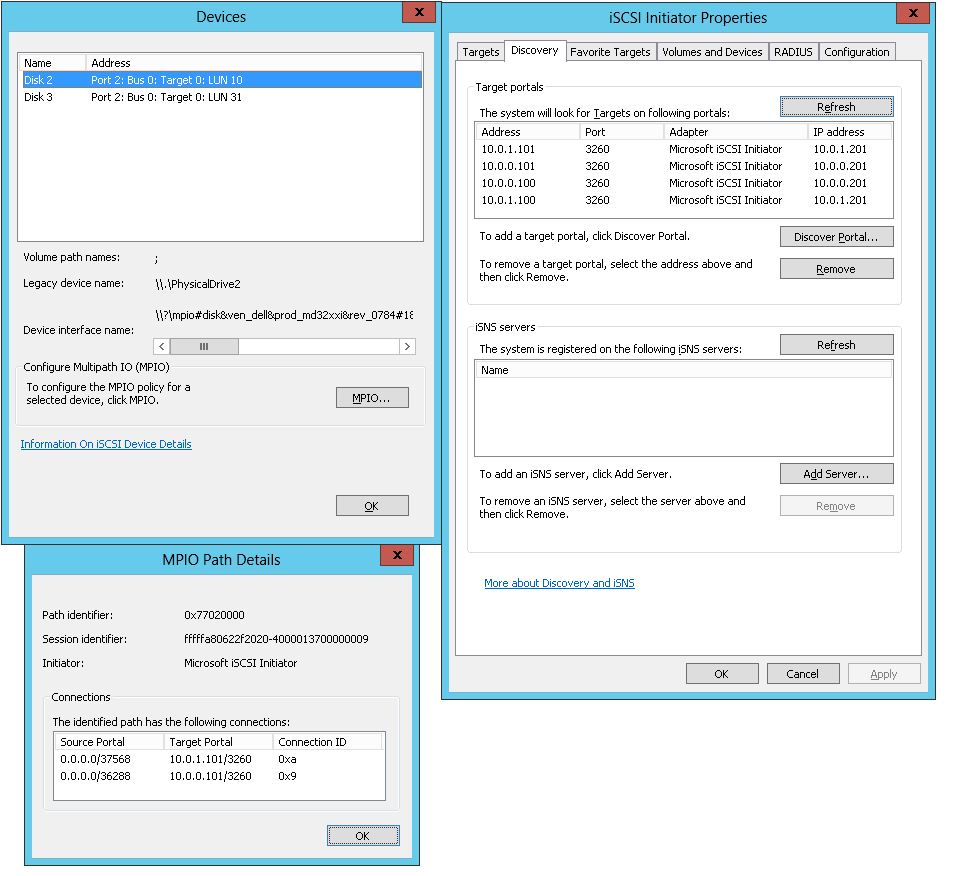

Node2 iSCSI:

Friday, December 21, 2012 10:10 AM

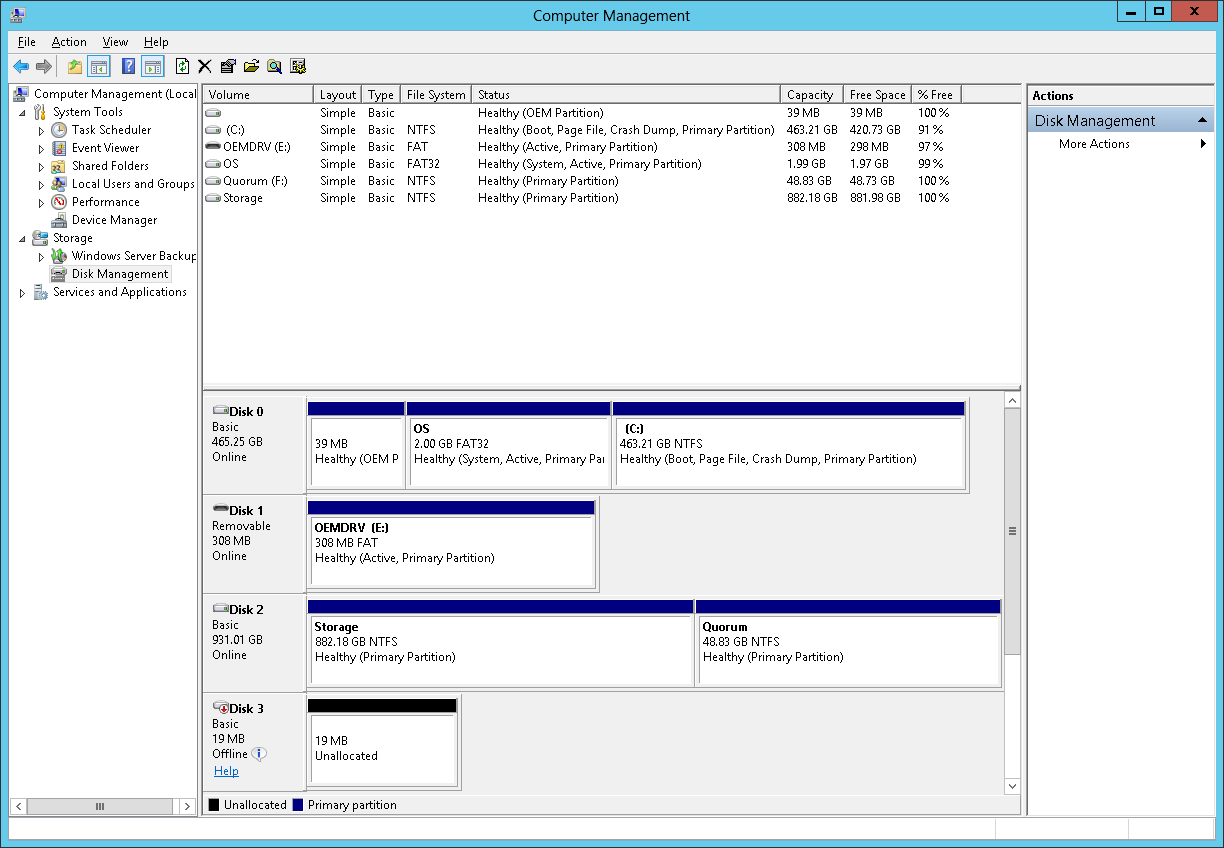

Node1 Disk Mgr:

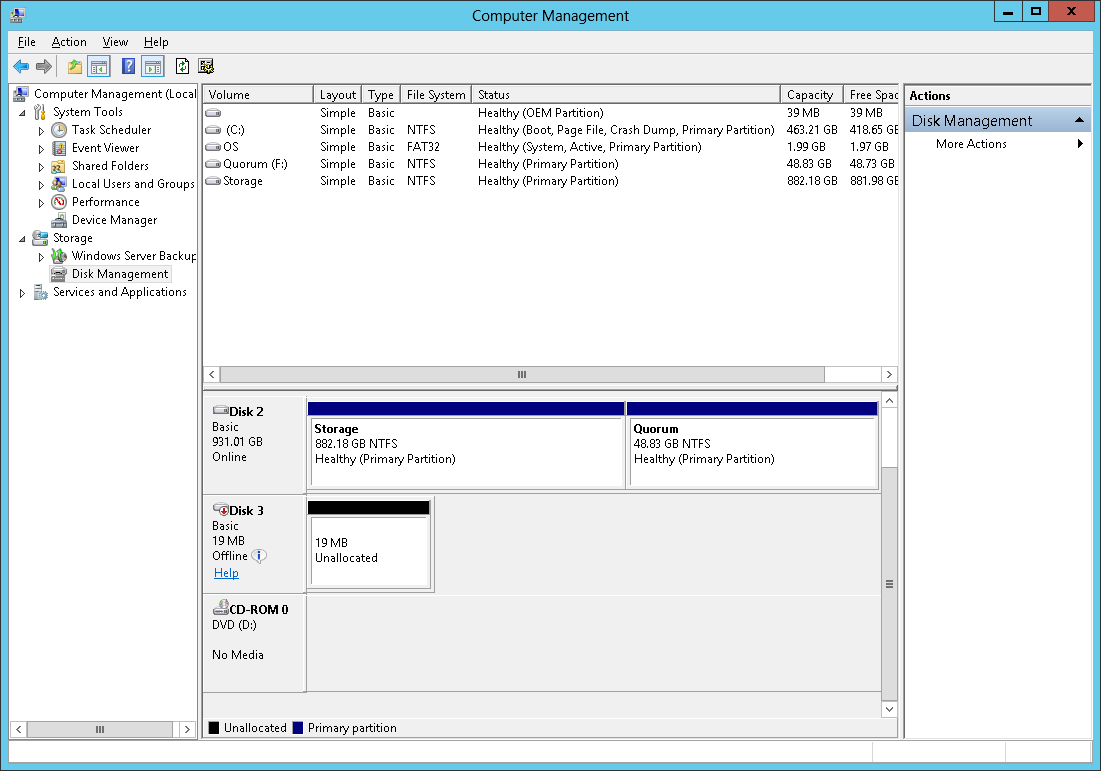

Node 2 Disk Mgr:

In the Disk Mgr shots above the disks are online - however, these warnings appear whether they're offline or not. This is all taking place pre-creation rather than post. I AM given the option to go ahead and create the cluster, even with these disks not being available.

Any advice would be appreciated!

Friday, December 21, 2012 10:33 PM | 1 vote

Disk 3 is not initialized and formatted.

Disk 2 is created with multiple volumes on it. I've never tried that in a cluster, but I don't think that will work.

tim

Monday, December 24, 2012 6:12 AM | 1 vote

Hi,

Tim has pointed out the problems: Disk 2 has two volumes, "Storage" (which I think is for data) and "Quorum".

You have a two-node cluster, so I assume it uses the "Node and Disk Majority" quorum mode. When using Node and Disk Majority mode, we recommend the following configuration for the disk witness:

- Use a small Logical Unit Number (LUN) that is at least 512 MB in size.

- Choose a basic disk with a single volume.

- Make sure that the LUN is dedicated to the disk witness. It must not contain any other user or application data.

- Choose whether to assign a drive letter to the LUN based on the needs of your cluster. The LUN does not have to have a drive letter (to conserve drive letters for applications).

- As with other LUNs that are to be used by the cluster, you must add the LUN to the set of disks that the cluster can use. For more information, see http://go.microsoft.com/fwlink/?LinkId=114539.

- Make sure that the LUN has been verified with the Validate a Configuration Wizard.

- We recommend that you configure the LUN with hardware RAID for fault tolerance.

- In most situations, do not back up the disk witness or the data on it. Backing up the disk witness can add to the input/output (I/O) activity on the disk and decrease its performance, which could potentially cause it to fail.

- We recommend that you avoid all antivirus scanning on the disk witness.

- Format the LUN with the NTFS file system.

In general, we always use a single volume per shared disk. You could connect a separate LUN and create a single volume on it for the quorum.
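As a rough illustration of the checklist above, the measurable recommendations can be expressed as a small Python check. The `WitnessCandidate` class and `witness_problems` function are hypothetical names, and the size and file system values in the example are assumptions, since the thread does not state them. Only the two-volume layout of Disk 2 comes from the discussion above.

```python
from dataclasses import dataclass


@dataclass
class WitnessCandidate:
    """Hypothetical description of a LUN being considered as the disk witness."""
    size_mb: int
    disk_type: str          # "BASIC" or "DYNAMIC"
    volume_count: int
    file_system: str
    holds_other_data: bool  # True if user or application data shares the LUN


def witness_problems(disk):
    """Return the recommendations from the list above that the disk does not meet."""
    problems = []
    if disk.size_mb < 512:
        problems.append("LUN is smaller than 512 MB")
    if disk.disk_type.upper() != "BASIC":
        problems.append("disk is not a basic disk")
    if disk.volume_count != 1:
        problems.append(f"disk has {disk.volume_count} volumes, expected exactly 1")
    if disk.file_system.upper() != "NTFS":
        problems.append("volume is not formatted with NTFS")
    if disk.holds_other_data:
        problems.append("LUN also holds user or application data")
    return problems


# Disk 2 as discussed: a basic disk with "Storage" and "Quorum" volumes.
# size_mb and file_system are assumed values for the sake of the example.
disk2 = WitnessCandidate(
    size_mb=1024,
    disk_type="BASIC",
    volume_count=2,
    file_system="NTFS",
    holds_other_data=True,
)

if __name__ == "__main__":
    for problem in witness_problems(disk2):
        print(problem)
```

A dedicated LUN with a single NTFS volume would return an empty list from this check.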

For more information, please refer to the following Microsoft articles:

Requirements and recommendations for quorum configurations

http://technet.microsoft.com/en-us/library/cc770620(v=ws.10).aspx#BKMK_requirements

Overview of the quorum in a failover cluster

http://technet.microsoft.com/en-us/library/jj612870.aspx#BKMK_overview

Failover Cluster Step-by-Step Guide: Configuring the Quorum in a Failover Cluster

http://technet.microsoft.com/en-us/library/cc770620(v=ws.10).aspx

Hope this helps!


Lawrence

TechNet Community Support

Monday, December 31, 2012 10:15 AM

Hi,

Sorry for the delayed response - holidays and all that.

Thanks for your responses, but they're not relevant to the question asked: the cluster isn't created yet because of the disk validation errors, so configuration of the quorum isn't important. The fact that my Disk Manager shows a partitioned volume on the LUN is a red herring. This warning occurs regardless of whether the disks have volumes or not; it's the List Potential Cluster Disks validation test that is giving me concern. I've gone ahead and removed the volumes anyway to prove the point, and I get exactly the same results.

For whatever reason, both of my nodes can mount the LUN via iSCSI without issue, but the report says that it is only visible from one half of the cluster, even though it says that for BOTH servers, and I'm given the option to go ahead and create the cluster anyway after the tests complete. For obvious reasons I want the storage tests to complete successfully, rather than with a warning, and I don't want to just blindly continue.

Can anyone shed light on the matter at all? It's got me somewhat confused!

Monday, December 31, 2012 10:39 AM

Hi Adam,

I know you mention using a Dell server in your implementation, but are you using a layer of virtualization, such as Hyper-V, VirtualBox or VMware?

Regards,

Mark Broadbent.


Monday, December 31, 2012 10:41 AM

Hi,

Yes, the purpose of the cluster will be to host Hyper-V VMs, and will make use of LM.

Monday, December 31, 2012 11:50 AM

It's moved on to a different error now, so I'm going to take a closer look at my iSCSI settings - thanks for that! If it turns out to be solely the iSCSI, I'll mark it as the answer.

Wednesday, January 2, 2013 6:59 AM

Hi,

I'd like to confirm the current situation: have you resolved this issue or found the root cause?

If there is anything that we can do for you, please do not hesitate to let us know, and we will be happy to help.

Lawrence

TechNet Community Support

Wednesday, January 2, 2013 12:14 PM

Mark,

Thanks for your assistance - the iSCSI config wasn't at fault after all.

I went ahead and created the cluster anyway, then brought the disks online after and re-ran the validation tests - the tests went through with no issues at all (except a warning about multiple network paths via my iSCSI SAN - odd that.)

Happy New Year!

Wednesday, January 2, 2013 1:49 PM

Good news. Yeah, it is sometimes just best to get the cluster live; then at least you aren't in that in-between state. Happy clustering and New Year to you too sir!

Regards,

Mark Broadbent.
