Including Data in a SQL Server Database Project

In many cases a database needs to be deployed with data pre-populated in some tables. Loading static data into tables that are referenced from other tables is a common case – examples might be customer types, address types, data states, etc. In some cases these data values will never change, in other cases they may be initial values that can be added to by applications. This class of data is variously called reference data, seed data, domain data or static data. Regardless of what you call it, this data must be present before the database can be used. We can think of this as extending the contract between your database project and the database to include deployment of data as well as schema.

SSDT uses a declarative approach to defining schema, which has the advantage that it is idempotent - you don’t need to concern yourself with the state of the target database when you deploy your design. Ideally, the same approach would apply to deploying data, which in this context can be thought of as part of the schema – rather like enum values that are defined within application code. Ideally, SSDT would allow you to supply data values for any table as part of the definition of the table. These data values would be part of the schema model so would be validated against the table definition, included in refactoring and schema comparisons, and cause appropriate data deployment scripts to be generated. While SSDT does not support defining data in the schema yet it’s certainly on our radar.

So what to do in the meantime? Well, if you use SQL Server Database Projects you can augment the schema deployment with pre- and post-deployment scripts. While these scripts are not declarative and don’t participate in the build, they can be included in the scope of refactoring. And while these scripts are imperative there are ways to write data population scripts so that they are idempotent. This is important as you need these scripts to deploy their data regardless of the content of the tables at the time they are executed and with minimum impact on the integrity of surrounding data.

You can write a composite INSERT, UPDATE, and DELETE script operating over a temp table to do this but SQL Server 2008 added MERGE support which can be used to merge data into a target table in a more compact manner. By including an appropriate MERGE script for each reference data table in your post-deployment script you can deploy data to any number of tables declaratively. And for smaller data sets, with less than a thousand rows per table (the sweet spot for most reference data), MERGE allows you to define the data inline in the script without needing to load it into a temp table. Let’s look at an example…

Let's populate an AddressType table to be used by reference to describe the purpose of addresses held in other referencing tables (not defined).

First let's create a new SQL database project in SSDT and add the reference data table definition below to it. (You can add a new Table using the item template or write this into a build script).

CREATE TABLE [AddressType] (

[AddressTypeID] INT NOT NULL PRIMARY KEY,

[Name] NVARCHAR (50) NOT NULL,

);

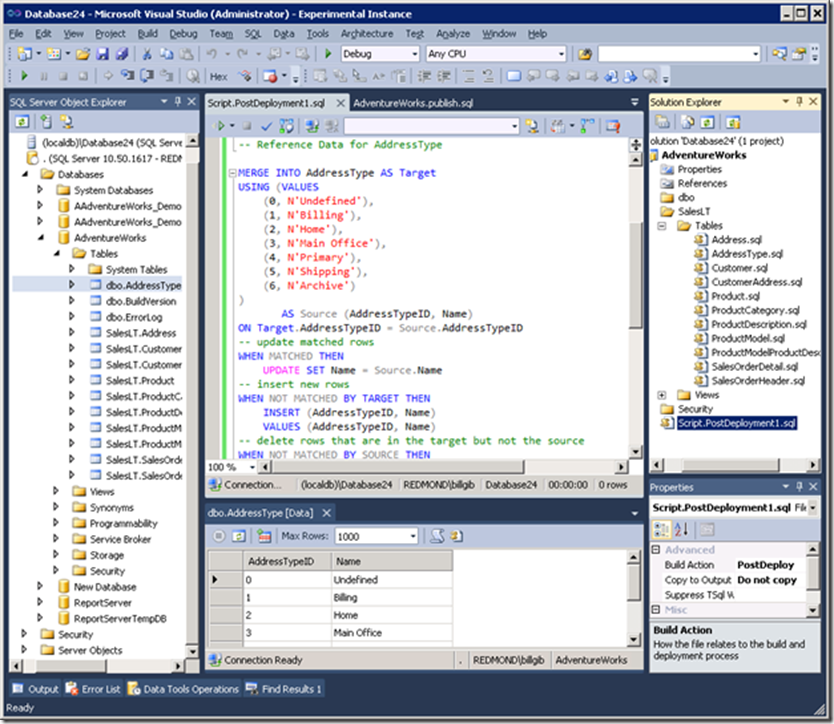

Next add a post-deployment script and copy the data population script below into it. Note the difference between the build action property value on the object definition script and the post-deployment script. You’re allowed only one active post-deployment script per project.

-- Reference Data for AddressType

MERGE INTO AddressType AS Target

USING (VALUES

(0, N'Undefined'),

(1, N'Billing'),

(2, N'Home'),

(3, N'Main Office'),

(4, N'Primary'),

(5, N'Shipping'),

(6, N'Archive')

)

AS Source (AddressTypeID, Name)

ON Target.AddressTypeID = Source.AddressTypeID

-- update matched rows

WHEN MATCHED THEN

UPDATE SET Name = Source.Name

-- insert new rows

WHEN NOT MATCHED BY TARGET THEN

INSERT (AddressTypeID, Name)

VALUES (AddressTypeID, Name)

-- delete rows that are in the target but not the source

WHEN NOT MATCHED BY SOURCE THEN

DELETE

As you can see it’s a compact syntax, with the data nicely encapsulated in one part of the statement.

The example above assumes a closed set of values, so it includes a delete clause that removes any extra rows that may have been added to the table. You might feel this is unnecessary if the table is suitably protected, but it does tighten the contract. Alternatively, you could modify this behavior if deletion is an issue or you need to cater for the possibility that extra rows may already have been referenced.

Here’s what it looks like in SSDT. The screen shot below was taken after publishing the project to the database. The post-deployment script is selected and visible in the upper tab and properties window, and the deployed data is visible in the Data Editor in the lower tab, opened by selecting View Data on the AddressType table in the SQL Server Object Explorer.

Some variations of this approach include:

- Spiking initial values into a table to which applications can add additional entries.

In this case exclude the delete clause, then the MERGE will guarantee the defined values exist but ignore others already added. - Use with a table that uses the IDENTITY clause to assign key values for application-added data.

In this case you will want to reserve a range of values for your initial data and define a starting value for the IDENTITY clause beyond that range. The example below assigns values for AddressTypeID starting at 100.

[AddressTypeID] INT IDENTITY (100, 1) NOT NULL PRIMARY KEY,

You will also need to enable identity insert as follows:

SET IDENTITY_INSERT AddressType ON

GO

-- your MERGE code goes here

SET IDENTITY_INSERT AddressType OFF

GO

You need to be aware that IDENTITY INSERT has global scope, so a database should either be taken offline or otherwise protected for the duration of the operation. - Use with reference tables that cross-reference each other.

In this case assign explicit key values from the referenced tables as foreign key values in referencing tables – just make sure to order the script so that the referenced tables are populated first. - Wrap the scripts into one or more procedures

A best-practice packaging variation is to place the script within the body of a stored procedure defined as part of the normal database schema and then execute the procedure from the post-deployment script. This has the advantage of providing additional build validation for the script – for example, if a column populated by the script is deleted from the table an error will be reported. You can then choose to either leave the procedure in the database enabling it to be run periodically to ‘refresh’ the data, or drop the procedure immediately after executing it post-deployment.

While SSDT has no built-in solution for including data as part of the schema yet, using MERGE from a post-deployment script works with all SQL Server and SQL Azure databases since SQL Server 2008, is straightforward to implement and gets the job done.

Comments

Anonymous

February 12, 2012

Good post! Thanks Bill. My two cents though: since reference data is more related to the application domain than to a database schema I would recommend keeping it within your application (inside application project) and to import during application init stage, this way you could for example populate AddressType db table from AddressType enum in your code without duplicating enum values in .sql (or .csv etc.) reference data files, also you will have an ability do update reference data more often if needed without republishing your database.Anonymous

February 21, 2012

I have to disagree with Konstantin. I don't want a developer to deploy application code and make data changes. Deployment of a database project is different from the deployment of an application project. For one thing, you want to back up the database(s) before applying a data change. The database project has a built-in deployment option to make sure that happens, while an application project doesn't. I also run database unit tests as a step in the automated deployment of our database solution. Each of my database projects has an assocated test project containing the database unit tests for the database that will be deployed using that project. Keeping the reference data with the database project is a natural extension of this process. Everything relating to the database is contained in one location. I don't want to have to hunt through application code to make a simple data change.Anonymous

July 26, 2012

How would you handle large amounts of data like over 5GB or 10GB or reference data?Anonymous

September 12, 2013

The comment has been removedAnonymous

September 16, 2013

I would have to agree with Markus. There should be a better way. I hate to say it, but Red-Gate Database Source Control tool has a nice way of including your reference data and then at deployment time determining what needs to happen. Something like that would be nice. Let's hope that with the release of Visual Studio 2013, we might see some improvement to this tooling.Anonymous

September 16, 2013

And the Merge option is only available in SQL Server 2008 and up. I'm still stuck on SQL Server 2005.Anonymous

March 12, 2014

Awesome, best solution for such task I have found so far.Anonymous

May 12, 2014

Another Variation that will let you use the insert syntax and separate the merge logic from your data: Create one post-deployment file per table that you want to populate with seed data '---- Checking for existence of temporary table; If table is found then drop it ----------------------- IF OBJECT_ID('#DataLoad_MySeedTable1') IS NOT NULL DROP TABLE #DataLoad_MySeedTable1 '---- Create temporary table structure to mimick the original table that you want to populate with data -------- '----- 1=2 in the where clause will restrict from copying any data but the temporary table will be created with the same structure as the original table ------- INSERT INTO #DataLoad_MySeedTable1 SELECT * FROM MySeedTable1 WHERE 1 = 2 '------ Start inserting data into the temporary table -------------------------------------------------------- INSERT INTO #DataLoad_MySeedTable1 VALUES (row1col1value, row1col2value,...), (row2col1value, row2col2value,...), (row3col1value, row3col2value,...); .... In another post-deployment file place the logic associated with all the data merging from temporary table to the main table. This is a common post deployment script and include the merge logic for all the seed tables: '------------- Merging data from Temporary table DataLoad_MySeedTable1 into MySeedTable1 ---------------- MERGE INTO MySeedTable1 AS Target USING (SELECT PKCOL1, COL2..... FROM #DataLoad_MySeedTable1 ) AS Source (PKCOL1, COL2...) ON Target.PKCOL1 = Source.PKCOL1 -- update matched rows WHEN MATCHED THEN UPDATE SET COL2 = COL2 -- insert new rows WHEN NOT MATCHED BY TARGET THEN INSERT (PKCOL1, COL2...) VALUES (PKCOL1, COL2...) -- delete rows that are in the target but not the source WHEN NOT MATCHED BY SOURCE THEN DELETE '------------- Merging data from Temporary table DataLoad_MySeedTable2 into MySeedTable2 ---------------- ... This approach separates the merging logic from the data; each seed table's data will reside in one file making it easy to modify. Happy Coding, VDAnonymous

June 01, 2014

@VD: I agree with your approach, and maybe I missed something but my understanding is that you can have ONLY ONE post deployment script per VS project.Anonymous

August 02, 2014

Thanks for this! I was looking for exactly how to do this and your article helped me greatly. Thanks!Anonymous

August 13, 2014

I would have to say, we certainly used a lot of post -deployment scripts with our customers. However, as an application grows, different needs emerge. The examples of such needs:

when you have a LOT of objects and some of them have 1000 records of reference/static/master data

when your reference data has to change to be in-synch with other application ( not due to the schema change) and builds frequency prevents manual changes

when you need to take data from other production applications and/or files - and for example subset it or mask it.

When you want some data validation at the end of the build process with the workflow - so that you can delete test data. In these cases, old nice SSIS package included into the build in post-deployment script with the DTEXEC works just fine. In case of masking, there are solutions integrating into the SQL Server/SSIS and they not only bring data from production environment - they mask it along the way and integrate into the cycle. Granted, Red Hat has it and embarcadero has it, and informatica has it ( if you have money for it , of course) yet, integration with the complete development lifecycle in Microsoft stack has much better outcomes. We presented the webinar on this topic - if you are interested, ask us at [email protected] for access to it or attend one of our sessions at SQL Saturday in your area.

- Anonymous

November 01, 2016

The comment has been removed

- Anonymous

Anonymous

October 08, 2014

Is there a way to generate a MERGE script from an existing table? I have not found any way to do this with the script generator in Management Studio - I only get it to create INSERT scripts, and these are not easily converted to MERGE. I hope I have overseen something...Anonymous

November 10, 2014

The comment has been removedAnonymous

November 24, 2015

@Knut Tveitane: You may want to look at the following: github.com/.../master.dbo.sp_generate_merge.sql With some slight modifications you can adapt it for your needs perfectly to get your initial Merge Scripts. It has some traps. For Example you should consider to set the max number of returned columns (characters) in the resultset option higher that default in Management Studio Query options (Options / Result / Text). @Everyone, especially SSDT Team: I would be really suprised if there is still NO PROGRESS on those concerns and questions? Maybe anyone official could give us a statement here? TYAnonymous

February 11, 2019

When this article was first written, the comment "While SSDT does not support defining data in the schema yet it’s certainly on our radar." sounded encouraging. Now, 7 years later (!) I wonder whether the radar is still working properly? (;