Hi Akshay Patel,

Please find below the prerequisites and configuration steps for ingesting streaming data into Azure SQL Database using the Stream Analytics integration.

To complete the steps below, you need the following resources:

- An Azure subscription. If you don't have an Azure subscription, create a free account.

- A database in Azure SQL Database. For details, see Create a single database in Azure SQL Database.

- A firewall rule allowing your computer to connect to the server. For details, see Create a server-level firewall rule.
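To illustrate what the server-level firewall rule does: each rule is defined by a start and an end IP address, and a client is admitted if its address falls inside a rule's range. The Python sketch below models only that range check, assuming IPv4 addresses. It is not an Azure API, it ignores any other access settings on the server, and the addresses are documentation examples rather than real values.

```python
import ipaddress


def is_allowed(client_ip: str, start_ip: str, end_ip: str) -> bool:
    """Return True if client_ip lies in the inclusive range [start_ip, end_ip].

    Models one server-level firewall rule (start IP, end IP); IPv4 is assumed.
    """
    client = ipaddress.IPv4Address(client_ip)
    start = ipaddress.IPv4Address(start_ip)
    end = ipaddress.IPv4Address(end_ip)
    if start > end:
        raise ValueError("start_ip must not be greater than end_ip")
    return start <= client <= end


def is_allowed_by_any(client_ip: str, rules: list[tuple[str, str]]) -> bool:
    """Return True if any (start_ip, end_ip) rule admits client_ip."""
    return any(is_allowed(client_ip, start, end) for start, end in rules)


if __name__ == "__main__":
    # Hypothetical rules: a single-address rule and a /24-sized range.
    rules = [("203.0.113.10", "203.0.113.10"), ("198.51.100.0", "198.51.100.255")]
    print(is_allowed_by_any("203.0.113.10", rules))  # True
    print(is_allowed_by_any("192.0.2.1", rules))     # False
```

A rule whose start and end addresses are equal admits exactly one machine, which is the usual shape of a rule created for "your computer".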

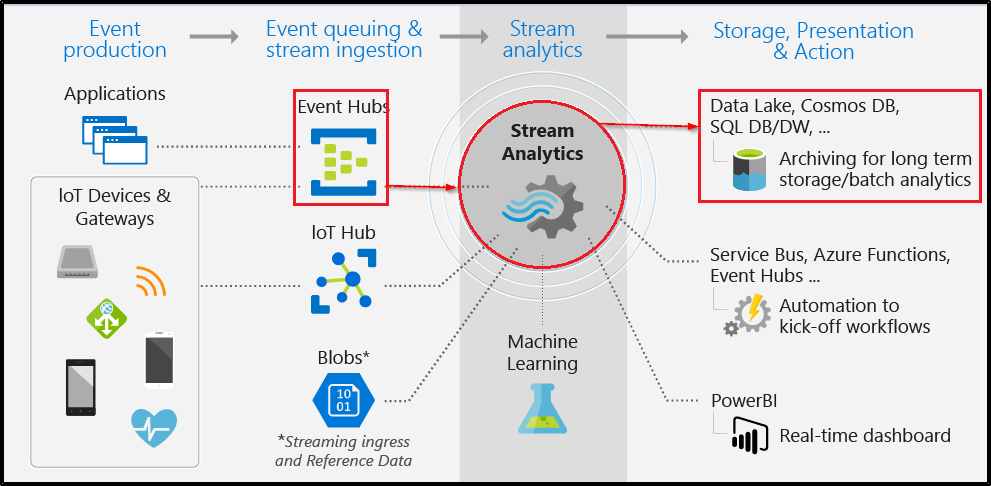

Configure Stream Analytics integration

- Sign in to the Azure portal.

- Navigate to the database where you want to ingest your streaming data, and select Stream Analytics (preview).

- To start ingesting your streaming data into this database, select Create, give your streaming job a name, and then select Next: Input.

- Enter your event source details, and then select Next: Output.

  - Input type: Event Hub/IoT Hub

  - Input alias: Enter a name to identify your event source

  - Subscription: Same as the Azure SQL Database subscription

  - Event Hub namespace: The name of the Event Hub namespace

  - Event Hub name: The name of the event hub within the selected namespace

  - Event Hub policy name (defaults to creating a new one): Give the policy a name

  - Event Hub consumer group (defaults to creating a new one): Give the consumer group a name

- Select the table you want to ingest your streaming data into by completing the following fields. Once done, select Create.

  - Username, Password: Enter your credentials for SQL Server authentication, and then select Validate.

  - Table: Select Create new or Use existing. In this flow, select Create new, which creates a new table when you start the Stream Analytics job.

- A query page opens with the following details:

  - Your Input (the input event source) from which you'll ingest data

  - Your Output (the output table) that will store the transformed data

  - A sample SAQL (Stream Analytics Query Language) query with a SELECT statement

  - Input preview: Shows a snapshot of the latest incoming data from the input

- After you're done authoring and testing the query, select Save query. Then select Start Stream Analytics job, finalize the job settings that appear, and start the job to begin ingesting transformed data into the SQL table.

- Once you start the job, you'll see it listed as Running, and you can take the following actions:

  - Start/stop the job: If the job is running, you can stop it. If the job is stopped, you can start it.

  - Edit job: You can edit the query. To make further changes to the job, for example adding more inputs or outputs, open the job in Stream Analytics. The Edit option is disabled while the job is running.

  - Preview output table: You can preview the table in the SQL query editor.

  - Open in Stream Analytics: Open the job in Stream Analytics to view its monitoring and debugging details.
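The Event Hub fields from the Input step (namespace, policy name, event hub name) correspond to the parts of a standard Event Hubs shared access signature (SAS) connection string. The portal creates the policy and wires up the connection for you, so you don't need to build this by hand; the Python sketch below only shows how those fields fit together. The helper names and the key value are hypothetical placeholders.

```python
from typing import Optional


def event_hub_connection_string(
    namespace: str, policy_name: str, key: str, event_hub: Optional[str] = None
) -> str:
    """Assemble a SAS connection string from the Input step's fields.

    EntityPath is only present when the policy is scoped to a single event hub.
    """
    parts = [
        f"Endpoint=sb://{namespace}.servicebus.windows.net/",
        f"SharedAccessKeyName={policy_name}",
        f"SharedAccessKey={key}",
    ]
    if event_hub is not None:
        parts.append(f"EntityPath={event_hub}")
    return ";".join(parts)


def parse_connection_string(conn_str: str) -> dict[str, str]:
    """Split a connection string into its name=value segments."""
    result: dict[str, str] = {}
    for segment in conn_str.split(";"):
        if not segment:
            continue  # tolerate a trailing ';'
        # Split on the FIRST '=' only: keys are Base64 and may end in '=' padding.
        name, sep, value = segment.partition("=")
        if not sep:
            raise ValueError(f"malformed segment: {segment!r}")
        result[name] = value
    return result


if __name__ == "__main__":
    # Placeholder values; a real key comes from the policy in the Azure portal.
    conn = event_hub_connection_string("my-namespace", "my-policy", "PLACEHOLDER=", "my-eventhub")
    print(parse_connection_string(conn)["SharedAccessKeyName"])  # my-policy
```

Splitting each segment on the first `=` only is the important design choice here, because a naive `split("=")` would break on the padding characters at the end of a SAS key.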
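The sample query on the query page follows the SAQL `SELECT ... INTO ... FROM ...` pattern, where `INTO` names the output alias and `FROM` names the input alias. The Python helper below is a hypothetical sketch that assembles such a pass-through query from the two aliases, optionally projecting named columns. The exact default text the portal generates may differ, and the aliases and column names used are assumptions for illustration.

```python
from typing import Optional, Sequence


def _bracket(name: str) -> str:
    """Wrap an identifier in square brackets; reject names containing ']'."""
    if not name or "]" in name:
        raise ValueError(f"unsupported identifier: {name!r}")
    return f"[{name}]"


def build_passthrough_query(
    input_alias: str,
    output_alias: str,
    columns: Optional[Sequence[str]] = None,
) -> str:
    """Build a SAQL query that copies events from input_alias into output_alias.

    With no columns, every field is selected (SELECT *); otherwise only the
    named columns are projected.
    """
    if columns:
        select_list = ",\n    ".join(_bracket(c) for c in columns)
    else:
        select_list = "*"
    return (
        "SELECT\n"
        f"    {select_list}\n"
        "INTO\n"
        f"    {_bracket(output_alias)}\n"
        "FROM\n"
        f"    {_bracket(input_alias)}"
    )


if __name__ == "__main__":
    # Hypothetical aliases and event fields.
    print(build_passthrough_query("my-input", "my-output", ["deviceId", "temperature"]))
```

Projecting explicit columns rather than `*` keeps the output aligned with the table's columns when you use an existing table.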
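The job actions described above follow a small set of rules: a stopped job can be started, a running job can be stopped, and the query can be edited only while the job is stopped. The Python class below is a hypothetical in-memory model of those rules for illustration; it does not call Azure and is not part of any Azure SDK.

```python
from enum import Enum


class JobState(Enum):
    STOPPED = "Stopped"
    RUNNING = "Running"


class StreamingJobModel:
    """Hypothetical model of the portal's job actions (not an Azure API)."""

    def __init__(self, name: str, query: str) -> None:
        self.name = name
        self.query = query
        # A newly created job does nothing until you start it.
        self.state = JobState.STOPPED

    def start(self) -> None:
        if self.state is JobState.RUNNING:
            raise RuntimeError(f"job {self.name!r} is already running")
        self.state = JobState.RUNNING

    def stop(self) -> None:
        if self.state is JobState.STOPPED:
            raise RuntimeError(f"job {self.name!r} is already stopped")
        self.state = JobState.STOPPED

    def edit_query(self, new_query: str) -> None:
        # Mirrors the portal rule: Edit is disabled while the job is running.
        if self.state is JobState.RUNNING:
            raise RuntimeError("stop the job before editing its query")
        self.query = new_query


if __name__ == "__main__":
    job = StreamingJobModel("my-streaming-job", "SELECT * INTO [my-output] FROM [my-input]")
    job.start()
    try:
        job.edit_query("SELECT deviceId INTO [my-output] FROM [my-input]")
    except RuntimeError as err:
        print(err)  # stop the job before editing its query
    job.stop()
    job.edit_query("SELECT deviceId INTO [my-output] FROM [my-input]")
    print(job.state.value)  # Stopped
```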

Please refer to the official Microsoft documentation for more details and configuration steps.

Hope this helps. Do let us know if you have any further queries.