@eoa - Thanks for the question and for using the MS Q&A platform.

Every Azure Synapse workspace comes with a default quota of vCores that can be used for Spark. The quota is split between the user quota and the dataflow quota so that neither usage pattern uses up all the vCores in the workspace. The quota differs depending on your subscription type but is split symmetrically between user and dataflow. However, if you request more vCores than remain in the workspace, you will get the following error:

> InvalidHttpRequestToLivy: Your Spark job requested 12 vcores. However, the workspace has a 0 core limit. Try reducing the numbers of vcores requested or increasing your vcore quota. HTTP status code: 400.
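If you want to log or alert on this condition, the two numbers in the error quoted above can be pulled out of the text. This is only a sketch: the regular expression is an assumption based on the wording of the message as quoted here, and `parse_quota_error` is a hypothetical helper name, not part of any Azure SDK.

```python
import re

# Pattern assumed from the wording of the error message quoted above;
# adjust it if the service changes the text.
LIVY_QUOTA_PATTERN = re.compile(
    r"requested (?P<requested>\d+) vcores\..*?has a (?P<limit>\d+) core limit",
    re.IGNORECASE | re.DOTALL,
)


def parse_quota_error(message):
    """Return (requested_vcores, remaining_vcores), or None if the text does not match."""
    match = LIVY_QUOTA_PATTERN.search(message)
    if match is None:
        return None
    return int(match.group("requested")), int(match.group("limit"))


error_text = (
    "InvalidHttpRequestToLivy: Your Spark job requested 12 vcores. "
    "However, the workspace has a 0 core limit. Try reducing the numbers of "
    "vcores requested or increasing your vcore quota. HTTP status code: 400."
)

requested, limit = parse_quota_error(error_text)
print(f"requested={requested}, limit={limit}, shortfall={requested - limit}")
```

For the message above, the job asked for 12 vCores while the workspace had none left, so the shortfall is the full 12 vCores.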

As the error message indicates, the vCores available to the Spark session are exhausted. When you define a Spark pool, you are effectively defining a quota per user for that pool.

The vCore limit depends on the node size and the number of nodes. To solve this problem, reduce your usage of the pool resources before submitting a new resource request by running a notebook or a job.
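As a rough illustration of how node size and node count add up to the vCores a session requests, here is a minimal sketch. The vCores-per-node table reflects the Synapse Spark node sizes as I understand them (please verify against the current documentation), the function names are hypothetical, and the sketch assumes the driver occupies one node of the same size as the executors.

```python
# vCores per node for each Synapse Spark node size.
# Assumed values; check the current Azure Synapse documentation.
VCORES_PER_NODE = {
    "Small": 4,
    "Medium": 8,
    "Large": 16,
    "XLarge": 32,
    "XXLarge": 64,
}


def vcores_requested(node_size, num_nodes):
    """Total vCores a session asks for: vCores per node times the number of nodes."""
    return VCORES_PER_NODE[node_size] * num_nodes


def fits_in_quota(node_size, num_nodes, remaining_vcores):
    """True if the request fits within the vCores still available in the workspace."""
    return vcores_requested(node_size, num_nodes) <= remaining_vcores


# Three Small nodes (one driver plus two executors) ask for 12 vCores.
print(vcores_requested("Small", 3))
# With 0 vCores remaining in the workspace, that request cannot be satisfied.
print(fits_in_quota("Small", 3, remaining_vcores=0))
```

Under these assumptions, three Small nodes would account for the 12 vCores mentioned in the error, and the request can only succeed once running sessions release vCores or the quota is raised.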

(or)

Scale up the node size and the number of nodes of the pool.

If the workspace quota itself is exhausted, as in the error above, you need to request a capacity increase via the Azure portal by creating a new support ticket.

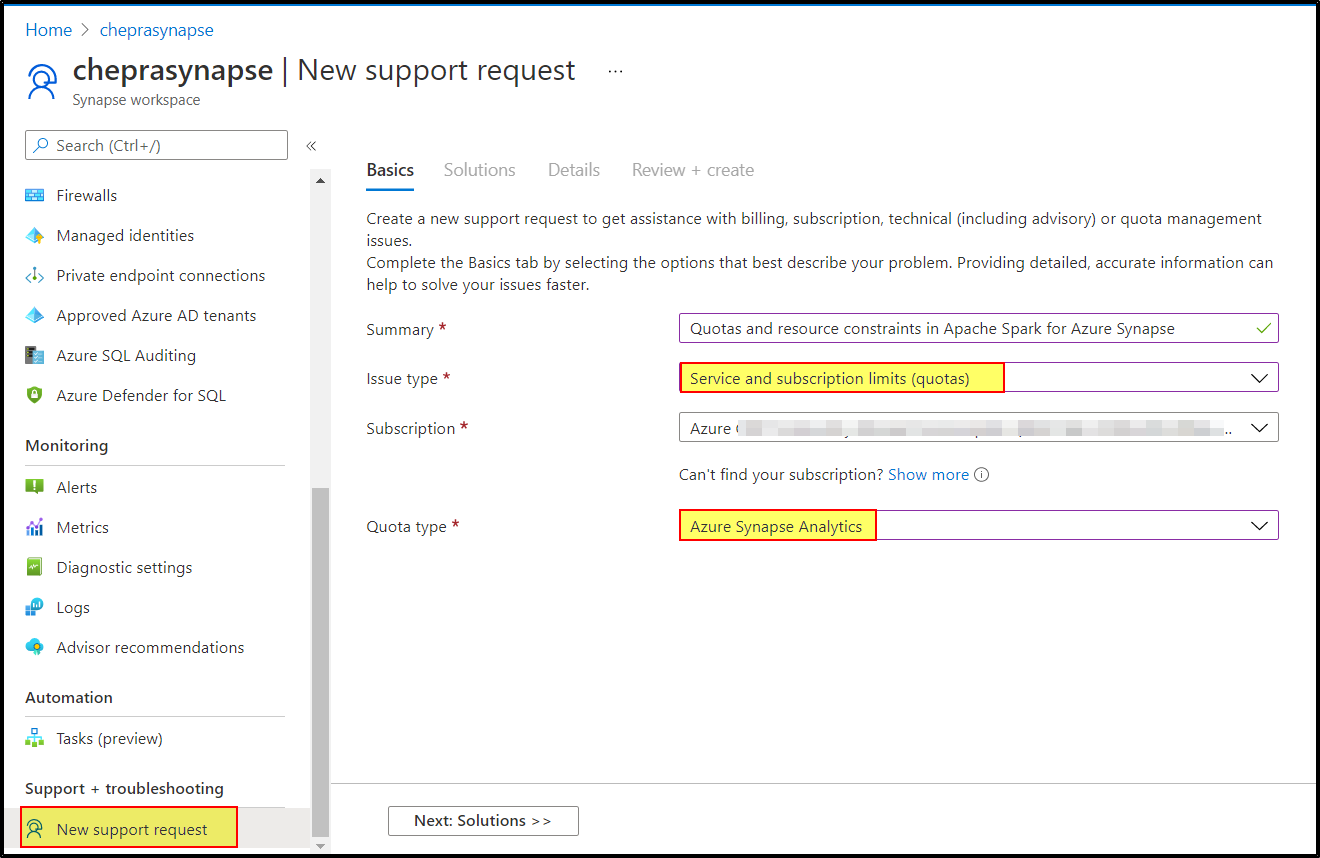

Step 1: Create a new support ticket and select Service and subscription limits (quotas) as the issue type and Azure Synapse Analytics as the quota type.

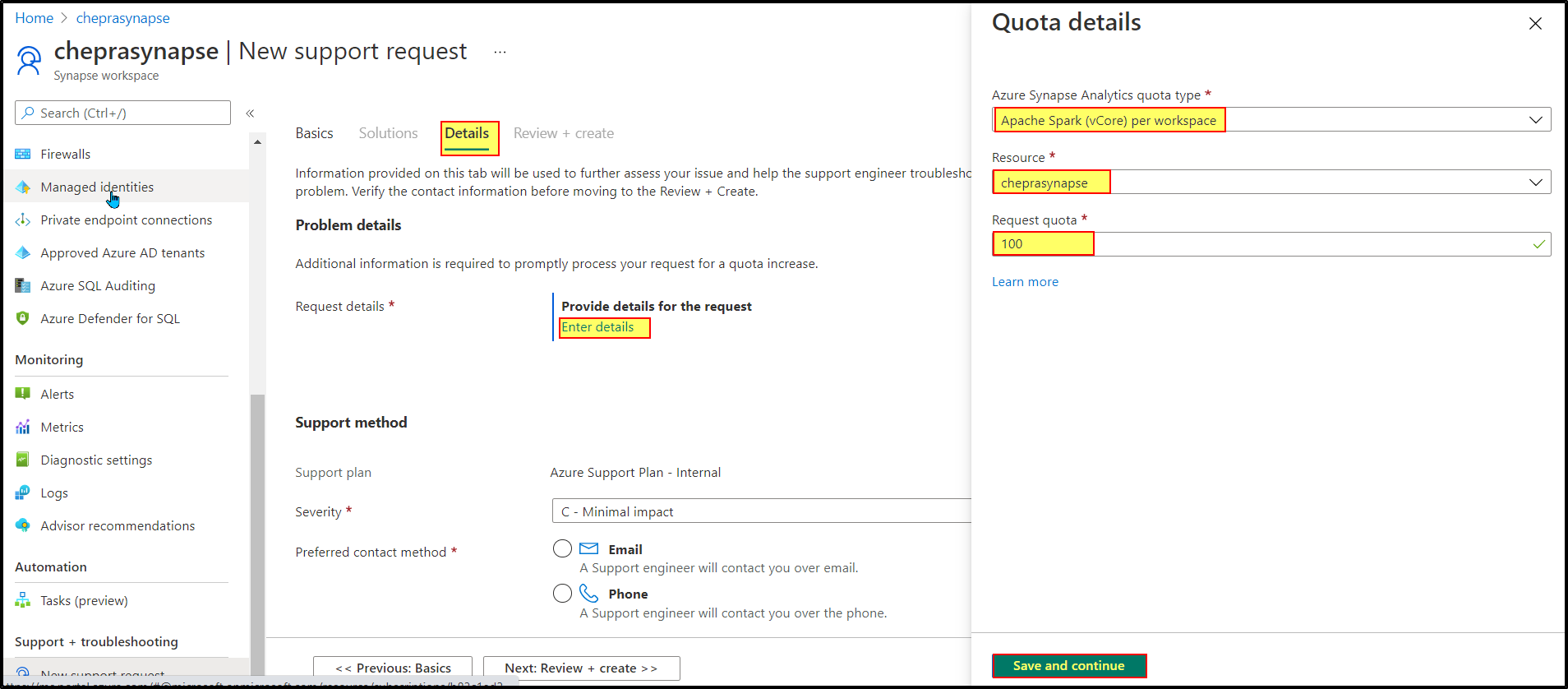

Step 2: In the Details tab, click Enter details, choose Apache Spark (vCore) per workspace as the quota type, select the workspace, and enter the requested quota as shown below.

Step 3: Select the support method and create the ticket.

For more details, refer to Apache Spark in Azure Synapse Analytics Core Concepts.

Hope this helps. Do let us know if you have any further queries.

If this answers your query, do click Accept Answer and Yes for "Was this answer helpful".